Introduction:

Data labeling is a crucial cornerstone of artificial intelligence and machine learning. It is the process through which raw data transforms into actionable insights. It fuels the training and refinement of ML models. Data labeling empowers algorithms to recognize patterns and make predictions. In addition, it drives intelligent decision-making across various industries.

This blog post explores the significance, techniques, challenges, and future directions of data labeling. Whether you are a seasoned data scientist or an enthusiast curious about the inner workings of AI, this exploration will shed light on the pivotal role data labeling plays in unlocking the true potential of machine learning.

Join us as we unravel the intricacies of data labeling and discover how it fuels the advancements shaping our AI-driven world.

The Importance of Data Labeling in Machine Learning

Data labeling is the backbone of machine learning. It serves as the foundation upon which accurate and reliable models are built. Labeled data quality directly impacts ML algorithms’ performance and effectiveness. Therefore, its importance cannot be overstated. Let us delve into why data labeling is so crucial in machine learning.

Enhancing Model Accuracy:

Labeled data provides ML models with ground truth information, which enables them to learn and generalize patterns accurately. Without proper labeling, models may struggle to differentiate between classes and may make incorrect predictions.

Enabling Supervised Learning:

In supervised learning, models are trained on labeled data, so the approach relies heavily on accurate data labeling. Accurate labels allow models to learn the relationships between input features and output labels, which leads to more precise predictions.

Supporting Training and Validation:

Data labeling is essential during the training and validation phases of ML model development. Labeling a diverse and representative dataset helps reduce bias and improve overall performance.

Facilitating Decision-Making:

ML models often drive critical decision-making processes in real-world applications. Accurate data labeling ensures that these decisions are based on reliable information. That leads to better outcomes and increased trust in AI systems.

Driving Innovation:

Organizations can unlock new opportunities for innovation and insights by effectively labeling data. Labeled datasets can be used to train advanced ML models. That leads to breakthroughs in image recognition, natural language processing, and predictive analytics.

Data labeling is not only a preparatory step in ML model development. It is a fundamental pillar defining AI systems’ success and reliability. Investing in high-quality data labeling processes is crucial to harnessing machine learning’s full potential and driving meaningful impact across industries.

What is Data Labeling?

Data labeling is the process of annotating or tagging data with labels that provide context, meaning, or information about the data. In machine learning and AI, data labeling involves assigning labels or annotations to data points to train and develop ML models. These labels help ML algorithms understand and interpret the data so that they can make predictions, classifications, or decisions based on the labeled information.

Data labeling is essential for supervised learning tasks, in which ML models learn from labeled examples to make predictions or infer patterns in new, unseen data. It involves various labeling tasks depending on the type of data and the ML objectives.

The various types of tags or labels:

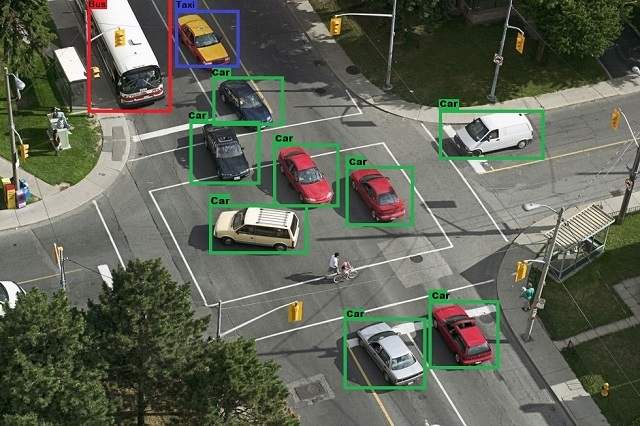

- Image Labeling: Assigning categories, bounding boxes, or segmentation masks to objects in images. It is crucial for object detection, image classification, or semantic segmentation.

- Text Labeling: Tagging text data with categories, entities, sentiment labels, or topic labels. Text labeling is vital for tasks like named entity recognition, sentiment analysis, or text classification.

- Audio Labeling: Annotating audio data with transcriptions, speaker identities, or sound events. It is employed in speech recognition, speaker diarization, or sound classification tasks.

- Video Labeling: Labeling video frames with actions, events, or object tracks. It is useful in tasks like action recognition, activity detection, or video object tracking.

- Structured Data Labeling: This involves labeling structured data, such as tabular or time series data, with target variables or attributes. It is important in tasks like regression, forecasting, or anomaly detection.

Data labeling requires domain expertise, annotation guidelines, quality control measures, and validation processes. Together, these help ensure accurate, consistent, and reliable labels. Labeling is a crucial step in ML model development because labeled data quality directly impacts ML models’ performance, accuracy, and generalization capabilities.

Understanding Data Labeling and Its Role

Data labeling is the process of assigning meaningful labels or tags to raw data, such as images, text, or sensor data. Labeling makes the data understandable and usable by machine learning algorithms, turning raw data into valuable insights and actionable information. Let us delve deeper into understanding data labeling and its crucial role in the machine learning pipeline.

Creating Labeled Datasets:

Data labeling involves creating labeled datasets by annotating data points with relevant information. For example, in image classification, each image may be labeled with the objects or categories it contains. Similarly, in text classification, documents may be labeled with their corresponding topics or sentiments.

Training ML Models:

Labeled datasets are used to train machine learning models through supervised learning techniques. During training, models learn from the labeled examples and adjust their parameters to minimize errors and improve accuracy when predicting on unseen data.
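
To make the idea of learning from labeled examples more concrete, here is a minimal sketch that uses a perceptron as a stand-in for a supervised model. The perceptron itself, the function names `train_perceptron` and `predict`, and the toy data are illustrative assumptions, not a method described in this post. The point is only to show parameters being adjusted whenever a prediction disagrees with its label.

```python
def train_perceptron(examples, epochs=10, learning_rate=1.0):
    """Fit weights and a bias from (features, label) pairs, label in {0, 1}."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            activation = sum(w * x for w, x in zip(weights, features)) + bias
            predicted = 1 if activation > 0 else 0
            error = label - predicted  # 0 when the prediction matches the label
            # Move the parameters only when the model disagrees with the label.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, features):
    """Classify a new, unlabeled point with the learned parameters."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


# Hypothetical labeled dataset: two features per example, binary label.
labeled = [([2.0, 1.0], 1), ([3.0, 2.0], 1),
           ([-1.0, -2.0], 0), ([-2.0, -1.0], 0)]
weights, bias = train_perceptron(labeled)
```

If the labels in `labeled` were wrong or inconsistent, the same loop would pull the parameters toward the wrong answers, which is why label quality matters so much for the trained model.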

Improving Model Performance:

The quality and accuracy of data labeling directly impact the performance of ML models. Well-labeled datasets with consistent and informative labels lead to more robust and reliable models. Conversely, inaccurate or ambiguous labels can introduce biases and errors in the model’s predictions.

Enabling Domain-Specific Applications:

Data labeling is tailored to specific domains and applications so that ML models are trained on relevant and meaningful data. For example, in healthcare, data labeling may involve annotating medical images with diagnostic labels, which enables ML models to assist in disease detection and diagnosis.

Iterative Process:

Data labeling is often an iterative process that involves continuous refinement and validation of labels. Human annotators, automated tools, or both may be used to check labeling accuracy and consistency in large-scale datasets.

Supporting AI Ethics and Fairness:

Ethical considerations like bias mitigation and fairness are critical in data labeling. Diverse and representative labels help mitigate biases and promote fairness in AI applications, fostering trust and transparency.

Understanding data labeling is essential for grasping how machine learning systems learn from data and make informed predictions. It bridges the gap between raw data and actionable insights, and it shapes the efficacy and ethical integrity of AI-driven solutions.

Benefits of Accurate Data Labeling

Accurate data labeling is a cornerstone of successful machine learning initiatives. Accurate labeling offers a range of benefits that directly contribute to the quality and reliability of ML models. Let us explore the advantages of ensuring precision and correctness in labeling processes.

Improved Model Performance:

The primary benefit of accurate data labeling is enhanced model performance. ML models can learn meaningful patterns and relationships when they are trained on accurately labeled datasets, leading to more accurate predictions and lower error rates.

Higher Prediction Confidence:

Accurate data labeling instills confidence in the predictions made by ML models. Stakeholders and users can place more trust in the model’s outputs when those outputs are based on reliable, well-labeled data.

Reduced Bias and Variance:

Accurate labeling helps mitigate bias and reduce variance in ML models. Bias can arise from inconsistent or erroneous labels and can lead to skewed predictions. By ensuring accuracy in labeling, organizations can build fairer and more robust models.

Cost and Time Efficiency:

Accurate data labeling can contribute to cost and time efficiency in ML model development. When trained on high-quality labeled data, models require fewer iterations and adjustments, which shortens development cycles and reduces associated costs.

Enhanced Decision Making:

Organizations rely on ML models for data-driven decision-making. Accurate data labeling ensures that these decisions are based on reliable insights. More accurate labeling leads to better business outcomes and strategic initiatives.

Facilitated Model Interpretability:

Accurate labeling also facilitates model interpretability. That allows stakeholders to understand how and why a model makes specific predictions. Clear and precise labels enable transparent communication and informed decision-making.

Compliance and Regulatory Alignment:

Accurate data labeling is essential for complying with data protection and privacy regulations in regulated industries. Properly labeled data helps ensure that sensitive information is handled appropriately, reducing compliance risks.

Improved User Experience:

Accurate data labeling creates a better user experience for AI-powered applications and services. Users benefit from more relevant and personalized recommendations or insights. That can drive engagement and satisfaction.

Organizations can unlock these significant benefits by prioritizing accuracy in data labeling processes, paving the way for successful and impactful machine learning deployments. It is an investment that yields long-term rewards in terms of model efficacy, stakeholder trust, and business value.

Impact of Quality Data Labels on Machine Learning Models:

The quality of data labels plays a critical role in shaping machine learning models’ performance, reliability, and generalizability. High-quality data labels contribute significantly to the effectiveness and accuracy of ML algorithms. Let us look at the impact of quality data labels on machine learning models.

Enhanced Model Accuracy:

Quality data labels lead to enhanced model accuracy because they provide clear and precise information about the data. Accurate labels reduce ambiguity and help ML models learn meaningful patterns and relationships, which results in more accurate predictions.

Reduced Overfitting and Underfitting:

Overfitting and underfitting are common challenges in ML model development. Quality data labels help mitigate these issues by contributing to a balanced and representative dataset. Models trained on well-labeled data are less likely to overfit to noise or underfit due to insufficient information.

Improved Generalization:

Quality data labels contribute to improved model generalization. This allows models to perform well on unseen data or real-world scenarios. Models trained on high-quality labeled datasets can generalize better across different domains. In addition, they can ensure robust performance in diverse environments.

Effective Transfer Learning:

Transfer learning, in which knowledge gained from one task is applied to another, relies on quality data labels for successful adaptation. Well-labeled datasets enable efficient transfer of knowledge between related tasks, accelerating model development and deployment.

Faster Convergence and Training:

Quality data labels expedite model convergence and training by providing clear optimization objectives. Models trained on accurately labeled data tend to converge faster, which reduces the computational resources and training time required.

Increased Robustness:

Quality data labels contribute to the robustness of ML models against noisy or adversarial inputs. Models trained on well-labeled data can handle variations and anomalies in the data more effectively, leading to consistent and reliable performance.

Facilitated Debugging and Error Analysis:

In the event of model errors or performance issues, quality data labels facilitate debugging and error analysis. Clear and accurate labels help identify sources of errors, enabling model developers to address issues and improve model performance iteratively.

Support for Model Explainability:

Quality data labels support model explainability by providing clear input-output relationships. Explainable AI is essential for understanding and interpreting model decisions, and it helps ensure transparency and trust in AI systems.

The impact of quality data labels on machine learning models is therefore profound and far-reaching. Organizations that prioritize generating and maintaining high-quality labeled datasets are poised to unlock the full potential of their ML initiatives, drive innovation, and deliver impactful solutions.

Data Labeling Approaches

Data labeling approaches vary depending on the type of data and the machine learning task. They also depend on the available resources and the desired level of accuracy. Here are some common data labeling approaches used in machine learning.

Manual Data Labeling:

- Description: Human annotators manually label data by reviewing it. They annotate each data sample according to predefined labeling guidelines.

- Use Cases: Used when high accuracy and precision are required. That is especially true for complex tasks like semantic segmentation, named entity recognition, or fine-grained classification.

- Pros: It ensures high-quality annotations. Besides, it allows for nuanced labeling decisions. It is suitable for small to medium-sized datasets.

- Cons: It is time-consuming, labor-intensive, and expensive. Multiple annotators may also be required for validation.

Semi-Automated Data Labeling:

- Description: Combines human expertise with automation: annotators review and refine automatically generated labels or suggestions from AI models.

- Use Cases: It speeds up the labeling process and reduces manual effort. Further, it is best suited for tasks like data augmentation, pre-labeling, or initial annotation.

- Pros: It improves labeling efficiency and reduces costs. In addition, it leverages AI assistance for faster labeling.

- Cons: Requires validation and quality assurance checks. Besides, it may still require human intervention for accurate labeling.
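
One way to picture a semi-automated workflow is as a routing step: model suggestions above some confidence level go to a lightweight spot-check queue, and everything else goes to annotators for full review. The sketch below assumes a hypothetical `route_prelabels` helper, made-up item IDs, and an arbitrary threshold of 0.9. None of these come from a specific labeling tool.

```python
def route_prelabels(predictions, threshold=0.9):
    """Split model suggestions into accepted and needs-review queues.

    predictions: list of (item_id, suggested_label, confidence) tuples.
    threshold: assumed cut-off; tune it against audited samples in practice.
    """
    accepted, review = [], []
    for item_id, label, confidence in predictions:
        queue = accepted if confidence >= threshold else review
        queue.append((item_id, label))
    return accepted, review


# Hypothetical pre-labels produced by some upstream model.
suggestions = [
    ("img_001", "cat", 0.97),
    ("img_002", "dog", 0.62),
    ("img_003", "cat", 0.91),
]
accepted, review = route_prelabels(suggestions)
```

Even the accepted queue usually deserves periodic sampling by a human, since a confident model can still be confidently wrong.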

Active Learning:

- Description: Utilizes machine learning models to intelligently select data samples based on uncertainty or informativeness scores for annotation.

- Use Cases: Maximizes learning gain with minimal labeled data. It prioritizes labeling efforts on challenging or informative data points.

- Pros: It efficiently uses resources, reduces labeling costs, and improves model performance with targeted data sampling.

- Cons: Requires initial labeled data for model training and may require expertise in active learning algorithms.
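
A common way to score uncertainty in active learning is the entropy of the model's predicted class probabilities: the flatter the distribution, the less sure the model is. The sketch below is a minimal illustration of that idea. The function names, the pool of documents, and the probabilities are all assumed for the example.

```python
import math


def entropy(probabilities):
    """Shannon entropy of a class distribution (higher means less certain)."""
    return -sum(p * math.log(p) for p in probabilities if p > 0)


def select_for_annotation(pool, budget):
    """Return the `budget` item ids whose predictions are most uncertain.

    pool: dict mapping item_id -> predicted class probabilities
          from the current model (hypothetical values here).
    """
    ranked = sorted(pool, key=lambda item_id: entropy(pool[item_id]),
                    reverse=True)
    return ranked[:budget]


unlabeled_pool = {
    "doc_a": [0.98, 0.02],
    "doc_b": [0.55, 0.45],
    "doc_c": [0.80, 0.20],
    "doc_d": [0.50, 0.50],
}
to_label = select_for_annotation(unlabeled_pool, budget=2)
```

Here the two near-coin-flip documents are sent to annotators first, while the confidently classified ones wait.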

Crowdsourcing:

- Description: Outsourcing data labeling tasks to a distributed workforce or crowd of annotators through online platforms.

- Use Cases: This is a scalable approach for large datasets and diverse labeling perspectives. It is suitable for tasks like sentiment analysis and data categorization.

- Pros: Scalable, cost-effective, fast turnaround time, access to global annotator pool.

- Cons: Quality control is challenging, annotations may be inconsistent, and post-processing and validation of annotations may be required.
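
Because crowd annotations can disagree, a typical post-processing step is to collect several votes per item and keep the majority label only when enough annotators agree. The following sketch assumes a hypothetical `aggregate_votes` helper, an agreement threshold of 0.6, and invented review IDs. Real platforms may use more elaborate schemes that weight annotators by reliability.

```python
from collections import Counter


def aggregate_votes(votes, min_agreement=0.6):
    """Majority-vote aggregation with a minimum agreement ratio.

    votes: dict mapping item_id -> list of labels from different annotators.
    Returns (consensus, disputed): consensus maps item_id -> winning label,
    disputed lists item ids that need expert adjudication.
    """
    consensus, disputed = {}, []
    for item_id, labels in votes.items():
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) >= min_agreement:
            consensus[item_id] = label
        else:
            disputed.append(item_id)
    return consensus, disputed


crowd_votes = {
    "review_1": ["positive", "positive", "negative"],
    "review_2": ["negative", "neutral", "positive"],
    "review_3": ["neutral", "neutral", "neutral"],
}
consensus, disputed = aggregate_votes(crowd_votes)
```

Items that land in `disputed` are good candidates for expert review or for clarifying the labeling guidelines.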

AI-Assisted Labeling:

- Description: Leverages AI algorithms, such as computer vision or natural language processing models, to automatically generate or suggest labels for data.

- Use Cases: Speeds up the labeling process and reduces manual effort. It is suitable for tasks like image recognition and entity recognition.

- Pros: Faster labeling. Reduces human error. Improves labeling consistency.

- Cons: Requires validation and quality assurance checks and may require fine-tuning of AI models for accurate labeling.

Transfer Learning and Pre-Labeling:

- Description: Transfers knowledge from pre-trained models to new labeling tasks, where pre-labeled data or partially labeled data is used for training.

- Use Cases: Speeds up the labeling process and reduces manual effort. It is suitable for tasks and domains where knowledge transfers from existing models.

- Pros: Faster labeling. Leverages existing labeled data. Reduces annotation workload.

- Cons: Requires labeled data for pre-training. It may require fine-tuning for task-specific requirements.

Each data labeling approach has strengths and limitations. The choice of approach depends on factors such as dataset size, complexity of the task, available resources, desired accuracy, and budget constraints. Organizations often combine multiple approaches or use hybrid strategies to optimize data labeling workflows and achieve high-quality labeled datasets for training machine learning models.

How does Data Labeling Work?

Data labeling involves several steps and processes to annotate or tag data with labels or annotations that provide context, meaning, or information about the data. It typically works as follows:

Define Labeling Task:

The first step is to define the labeling task based on the ML objectives and the data type. This includes determining the types of labels needed (such as categories, classes, or entities), defining labeling guidelines, and establishing quality control measures.

Collect and Prepare Data:

Relevant data samples are collected and prepared for labeling. This may involve data cleaning, preprocessing, and organizing data into suitable formats for labeling tasks.

Annotation Tools:

Data annotators use specialized annotation tools or platforms to label the data. These tools vary based on the labeling task and can include image annotation tools for bounding boxes or segmentation, text annotation tools for named entity recognition or sentiment analysis, audio annotation tools for transcriptions or speaker diarization, etc.

Assign Labels:

Annotators review data samples and assign appropriate labels or annotations based on the labeling guidelines. For example, annotators may draw bounding boxes around objects in images, annotate text with named entities, transcribe audio recordings, or classify data into predefined categories.

Quality Assurance:

Quality assurance processes are conducted to ensure labeling accuracy, consistency, and reliability. This may involve multiple annotators validating labels, resolving disagreements, applying consensus rules, and conducting error detection checks to maintain labeling quality.

Iterative Improvement:

Feedback loops and iterative improvement cycles refine labeling guidelines, address labeling challenges, and improve labeling quality over time. Annotators provide feedback, corrections, and suggestions for optimizing labeling workflows and enhancing accuracy.

Validation and Verification:

Labeled data is validated and verified to ensure it meets quality standards and aligns with the ML objectives. This may involve validation checks, inter-annotator agreement (IAA) assessments, and validation against ground truth or expert-labeled data.
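
Inter-annotator agreement is often summarized with Cohen's kappa, which corrects the raw agreement rate between two annotators for the agreement expected by chance. The sketch below is a plain-Python illustration using the standard formula kappa = (p_o - p_e) / (1 - p_e). The function name and the two annotators' labels are invented for the example.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set.")
    n = len(labels_a)
    # p_o: fraction of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # p_e: agreement expected if each annotator labeled at random
    # according to their own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["cat", "cat", "dog", "dog", "cat",
               "dog", "cat", "dog", "cat", "dog"]
annotator_2 = ["cat", "cat", "dog", "dog", "cat",
               "dog", "cat", "dog", "dog", "cat"]
kappa = cohens_kappa(annotator_1, annotator_2)  # 8 of 10 items agree
```

In this example the raw agreement is 0.8, but half of that would be expected by chance, so kappa comes out at roughly 0.6. Which kappa value counts as acceptable depends on the task and should be agreed on in the labeling guidelines.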

Integration with ML Models:

Once data labeling is complete and validated, the labeled data is integrated into ML pipelines for training, validation, and testing of ML models. Labeled data serves as the training dataset that ML algorithms learn from to make predictions, classifications, or decisions on new, unseen data.
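
As a rough illustration of this hand-off, the sketch below shuffles a labeled dataset and splits it into training, validation, and test portions. The 80/10/10 ratio, the fixed seed, and the `split_dataset` name are assumptions chosen for the example, and real pipelines often stratify by label as well.

```python
import random


def split_dataset(records, train=0.8, validation=0.1, seed=42):
    """Shuffle labeled records and split them into three disjoint subsets."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducible splits
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * validation)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # whatever remains becomes the test set
    )


# Hypothetical labeled records: (sample id, binary label).
labeled_records = [(f"sample_{i}", i % 2) for i in range(20)]
train_set, val_set, test_set = split_dataset(labeled_records)
```

Keeping the test portion untouched until the end gives an honest estimate of how the model handles unseen data.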

Monitoring and Maintenance:

Continuous monitoring and maintenance of labeled data are essential to ensure data quality, update labeling guidelines as needed, address drift or changes in data distributions, and adapt labeling processes to evolving ML requirements.
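
One simple signal for drift is a change in how often each label appears. The sketch below compares the label mix of a reference batch with a recent batch using total variation distance. The metric choice, the 0.1 alert threshold, and the spam/ham data are assumptions for illustration, not a prescribed monitoring method.

```python
from collections import Counter


def label_distribution(labels):
    """Return the share of each label in a batch."""
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}


def total_variation_distance(reference, current):
    """Half the summed absolute difference between two label distributions."""
    ref = label_distribution(reference)
    cur = label_distribution(current)
    classes = set(ref) | set(cur)
    return 0.5 * sum(abs(ref.get(c, 0.0) - cur.get(c, 0.0)) for c in classes)


reference_labels = ["spam"] * 20 + ["ham"] * 80
recent_labels = ["spam"] * 45 + ["ham"] * 55
drift = total_variation_distance(reference_labels, recent_labels)
needs_review = drift > 0.1  # assumed alert threshold
```

A flagged batch does not prove the labels are wrong. It is a prompt to check whether the data has changed, the guidelines are being applied differently, or both.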

Data labeling involves a collaborative effort between annotators, domain experts, data scientists, and stakeholders to annotate data accurately, optimize labeling workflows, and support the development of robust ML models.

What are Common Types of Data Labeling?

Common types of data labeling depend on the nature of the data and the machine learning task at hand. Here are some of the most common types of data labeling:

Image Labeling:

- Bounding Box Annotation: Annotating objects in images by drawing bounding boxes around them. Used for object detection tasks.

- Polygon Annotation: Similar to bounding boxes, but allows for more complex shapes, such as irregular objects.

- Semantic Segmentation: Labeling each pixel in an image with a corresponding class label. It is used for pixel-level object segmentation.

- Instance Segmentation: Similar to semantic segmentation, but distinguishes between different instances of the same class, like multiple cars in an image.

- Landmark Annotation: Identifying and labeling specific points or landmarks in images. It is commonly used in facial recognition or medical imaging.
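
When two people draw a bounding box around the same object, a widely used way to compare the boxes is intersection over union (IoU): the area the boxes share divided by the area they cover together. IoU is not mentioned above, so treat the sketch below as one possible check. The coordinate convention `(x_min, y_min, x_max, y_max)` and the example boxes are assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Each box is (x_min, y_min, x_max, y_max) in pixel coordinates.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped to zero for disjoint boxes.
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = ((ax2 - ax1) * (ay2 - ay1)
             + (bx2 - bx1) * (by2 - by1)
             - intersection)
    return intersection / union if union > 0 else 0.0


annotator_box = (10, 10, 110, 110)
reviewer_box = (60, 10, 160, 110)
overlap = iou(annotator_box, reviewer_box)  # half of each box overlaps
```

Here the overlap is one third, which most projects would treat as a disagreement worth resolving. The acceptable cut-off is a project decision.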

Text Labeling:

- Named Entity Recognition (NER): Tagging entities such as names, organizations, locations, dates, and other entities in text data.

- Sentiment Analysis: Labeling text with sentiment labels (positive, negative, neutral) to analyze the sentiment expressed in text.

- Text Classification: Categorizing text into predefined classes or categories based on content, topic, or intent.

- Intent Recognition: Identifying the intent or purpose behind text data, commonly used in chatbots and virtual assistants.
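
For named entity recognition, labels are frequently stored per token using the BIO scheme, where `B-` marks the beginning of an entity, `I-` marks its continuation, and `O` marks tokens outside any entity. BIO is one common encoding rather than the only option. The sentence, entity types, and `spans_to_bio` helper below are made up for illustration.

```python
def spans_to_bio(tokens, entity_spans):
    """Convert token-level entity spans into BIO tags.

    entity_spans: list of (start_token, end_token_exclusive, entity_type).
    Spans are assumed not to overlap.
    """
    tags = ["O"] * len(tokens)
    for start, end, entity_type in entity_spans:
        tags[start] = f"B-{entity_type}"
        for index in range(start + 1, end):
            tags[index] = f"I-{entity_type}"
    return tags


# Invented example sentence and annotations.
tokens = ["Maria", "Silva", "joined", "Acme", "in", "2021"]
spans = [(0, 2, "PER"), (3, 4, "ORG"), (5, 6, "DATE")]
tags = spans_to_bio(tokens, spans)
```

Storing one tag per token keeps the labels aligned with the model input, which is why many sequence labeling pipelines use this format.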

Audio Labeling:

- Transcription: Converting spoken audio into text format, often used for speech recognition tasks.

- Speaker Diarization: Labeling segments of audio data with speaker identities to distinguish between different speakers in conversations or recordings.

- Emotion Recognition: Labeling audio with emotion tags (happy, sad, angry) to analyze emotional content in speech.

Video Labeling:

- Action Recognition: Labeling actions or activities performed in video frames, such as walking, running, or gestures.

- Object Tracking: Tracking and labeling the movement of objects across video frames, commonly used in surveillance and similar monitoring applications.

- Temporal Annotation: Labeling specific time intervals or video segments, such as events, scenes, or transitions.

Structured Data Labeling:

- Regression Labeling: Assigning numerical labels to data points for regression tasks like predicting sales prices, temperatures, or quantities.

- Time Series Labeling: Labeling time series data with target variables or events for forecasting, anomaly detection, or pattern recognition.

- Categorical Labeling: Categorizing structured data into discrete classes or categories based on attributes or features.

Other Types:

- Geospatial Labeling: Labeling geospatial data, such as maps, satellite images, or GPS coordinates, for geographic analysis or mapping applications.

- 3D Labeling: Labeling three-dimensional data, such as point clouds, 3D models, or depth maps, for tasks like object detection in 3D scenes or autonomous driving systems.

These are just some common types of data labeling used in various ML and AI applications. The specific type of labeling depends on the data format, task requirements, and desired outcomes of the ML model.

Best Practices for Data Labeling

Here are some best practices for data labeling:

Clear Labeling Guidelines:

Develop clear and comprehensive labeling guidelines that define labeling criteria, standards, and quality metrics. Provide annotators with detailed instructions, examples, and edge cases to ensure consistent and accurate labeling.

Annotator Training:

Train annotators on labeling guidelines, annotation tools, and quality control procedures. Provide ongoing feedback, coaching, and support to improve labeling accuracy and efficiency.

Quality Assurance (QA):

Implement robust QA processes to validate labeled data for accuracy, consistency, and reliability. Use multiple annotators for validation, resolve disagreements, and apply consensus rules to maintain labeling quality.

Iterative Improvement:

Continuously iterate on labeling guidelines, address labeling challenges, and incorporate feedback from annotators and stakeholders. Refine labeling workflows, update guidelines as needed, and optimize labeling processes for efficiency and effectiveness.

Validation against Ground Truth:

Validate labeled data against ground truth or expert-labeled data to assess labeling accuracy and alignment with ML objectives. Conduct validation checks, inter-annotator agreement (IAA) assessments, and error detection to ensure high-quality labels.

Feedback Loops:

Establish feedback loops where annotators provide input, corrections, and suggestions for improving labeling workflows. Encourage open communication. Address annotator concerns and implement changes based on feedback to enhance labeling outcomes.

Consistency across Annotators:

Standardize labeling conventions, terminology, and criteria to ensure consistency in labeling decisions across annotators. Use examples, guidelines, and training materials to promote uniformity and reduce labeling variations.

Labeling Tools and Automation:

Leverage annotation tools and automation technologies to streamline labeling workflows. Tools reduce manual effort and improve labeling efficiency. Explore AI-assisted labeling, active learning, and semi-automated labeling techniques to enhance productivity and accuracy.

Domain Expertise:

Involve domain experts, subject matter specialists, or reviewers in labeling tasks to provide domain-specific knowledge, validate labels, and ensure contextual relevance. Incorporate expert feedback and domain insights to refine labeling guidelines and improve label quality.

Ethical Considerations:

Consider ethical implications, biases, and fairness in labeling decisions. Mitigate algorithmic biases, ensure diversity in labeled datasets, and adhere to ethical labeling practices to promote responsible AI development and equitable outcomes.

By following these best practices, organizations can ensure high-quality labeled datasets. They can also optimize ML model training and drive reliable and accurate predictions in AI applications.

Why is Data Labeling Necessary?

Data labeling is necessary for developing ML and AI systems. The reasons are given below.

Supervised Learning:

In supervised learning, ML models are trained using labeled data in which each data point is associated with a corresponding label or target variable. Data labeling provides the ground truth, or correct answers, that the model learns from, enabling it to make accurate predictions or classifications on new, unseen data.

Model Training:

Labeled data is essential for training ML models across various domains and applications. Whether it is image recognition, natural language processing, speech recognition, or other tasks, labeled data serves as the foundation for teaching ML algorithms to understand patterns, features, and relationships in the data.

Algorithm Understanding:

Data labeling helps ML algorithms understand the semantics, context, and meaning of data. By assigning labels representing different classes, categories, or attributes, the algorithms learn to recognize patterns, make associations, and generalize from labeled examples to unseen data.

Feature Extraction:

Labeled data assists in feature extraction, where ML models learn to identify relevant features or attributes that contribute to predictions or decisions. Labels guide the model in selecting and weighing predictive or discriminative features for the target task.

Performance Evaluation:

Labeled data is crucial for evaluating the performance and accuracy of ML models. By comparing model predictions with the ground truth labels, developers can assess the model’s correctness, precision, recall, and other performance metrics. This enables model refinement and optimization.
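
Comparing predictions with ground truth labels can be sketched in a few lines. The example below computes precision and recall for one class of interest. The `precision_recall` helper, the spam/ham labels, and the predictions are invented for illustration.

```python
def precision_recall(y_true, y_pred, positive):
    """Precision and recall for the `positive` class.

    y_true: ground truth labels; y_pred: model predictions, same order.
    """
    pairs = list(zip(y_true, y_pred))
    true_pos = sum(t == positive and p == positive for t, p in pairs)
    false_pos = sum(t != positive and p == positive for t, p in pairs)
    false_neg = sum(t == positive and p != positive for t, p in pairs)
    predicted_pos = true_pos + false_pos
    actual_pos = true_pos + false_neg
    precision = true_pos / predicted_pos if predicted_pos else 0.0
    recall = true_pos / actual_pos if actual_pos else 0.0
    return precision, recall


ground_truth = ["spam", "spam", "ham", "ham", "spam", "ham"]
predictions = ["spam", "ham", "ham", "spam", "spam", "ham"]
precision, recall = precision_recall(ground_truth, predictions,
                                     positive="spam")
```

In this toy case both values are 2/3: two of the three items predicted as spam really are spam, and two of the three real spam items were caught. These numbers are only as trustworthy as the ground truth labels they are computed against.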

Generalization and Robustness:

ML models trained on labeled data generalize their learning to new, unseen data instances. Well-labeled data helps models generalize patterns, handle variations, and make reliable predictions in real-world scenarios, which supports robustness and adaptability.

Domain Specificity:

Labeled data allows ML models to learn domain-specific knowledge, terminology, and patterns. Labeled data captures domain expertise and nuances critical for accurate predictions and decision-making in fields like healthcare, finance, manufacturing, or natural language processing.

Bias Detection and Mitigation:

Data labeling helps detect and mitigate biases in ML models. Developers can address biases and ensure equitable outcomes in AI applications by analyzing labeled data for fairness, diversity, and representativeness.

Data labeling is necessary to train, evaluate, and optimize ML models effectively. In addition, it enables AI systems to make informed decisions, automate tasks, and deliver value across diverse domains and applications.

How Can Data Labeling Be Done Efficiently?

Efficient data labeling involves optimizing workflows, leveraging automation, and implementing best practices to streamline the labeling process while maintaining high-quality annotations. Here are several strategies to achieve efficient data labeling.

Clear Labeling Guidelines:

Develop clear and concise labeling guidelines that provide annotators with specific instructions, examples, and criteria for labeling data. Clear guidelines reduce ambiguity, improve consistency, and help annotators make accurate labeling decisions efficiently.

Annotation Tools:

Use annotation tools and software platforms that are user-friendly and intuitive and that support efficient labeling workflows. Choose tools with keyboard shortcuts, bulk editing, annotation templates, and collaboration capabilities to enhance productivity.

Automation and AI-Assisted Labeling:

Leverage automation and AI-assisted labeling techniques to reduce manual effort and speed up labeling tasks. Use AI algorithms for tasks like pre-labeling, auto-segmentation, object detection, or entity recognition to assist annotators and accelerate labeling workflows.

Active Learning:

Implement active learning strategies to intelligently select data samples for annotation. Active learning focuses on informative examples that improve model performance. Use active learning algorithms to prioritize labeling efforts. This reduces labeling costs and maximizes learning gain with minimal labeled data.

Semi-Automated Labeling:

Semi-automated labeling approaches combine human expertise with automation. They use tools that allow annotators to review and refine AI-generated labels, correct errors, and provide feedback, improving labeling accuracy efficiently.

Parallel Labeling:

In parallel labeling workflows, assign multiple annotators to work on labeling tasks simultaneously. Divide data samples among annotators, establish quality control measures, and merge annotations for consensus to speed up labeling without compromising quality.
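
A simple way to divide work while still allowing consensus checks is to give every item to more than one annotator in round-robin fashion. The sketch below assumes a hypothetical `assign_batches` helper, three made-up annotators, and an overlap of two annotators per item.

```python
def assign_batches(item_ids, annotators, overlap=2):
    """Assign each item to `overlap` distinct annotators, round-robin."""
    if not 1 <= overlap <= len(annotators):
        raise ValueError("overlap must be between 1 and the number of annotators")
    assignments = {name: [] for name in annotators}
    for index, item_id in enumerate(item_ids):
        for offset in range(overlap):
            # Consecutive offsets pick different annotators for the same item.
            annotator = annotators[(index + offset) % len(annotators)]
            assignments[annotator].append(item_id)
    return assignments


items = [f"item_{i}" for i in range(6)]
work = assign_batches(items, ["ana", "ben", "chloe"], overlap=2)
```

With six items and an overlap of two, each of the three annotators receives four items, and every item ends up with two independent labels that can later be merged or compared.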

Quality Assurance (QA) Tools:

Implement QA tools and processes to validate labeled data for accuracy and consistency. Use QA checks, inter-annotator agreement (IAA) assessments, and error detection mechanisms to efficiently identify and resolve labeling errors.

-

Iterative Improvement:

Continuously iterate on labeling workflows, guidelines, and tools based on feedback and learning from labeling tasks. Incorporate annotator input and address labeling challenges. Iterative improvement optimizes processes and raises efficiency and labeling quality over time.

-

Training and Onboarding:

Provide comprehensive training and onboarding for annotators to familiarize them with labeling guidelines, tools, and QA procedures. Offer ongoing support, coaching, and resources to empower annotators and enhance their efficiency in labeling tasks.

-

Optimize Workflows:

Analyze and optimize labeling workflows to identify bottlenecks, streamline processes, and reduce redundant tasks. Use workflow analytics, performance metrics, and process optimization techniques to improve efficiency and throughput in data labeling.

By adopting these strategies and leveraging technology-driven solutions, organizations can achieve efficient data labeling workflows, increase productivity, reduce labeling costs, and deliver high-quality labeled datasets for machine learning and AI applications.
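As a rough illustration of the parallel labeling strategy, the sketch below splits items among annotators and gives a small share of them to a second annotator so that agreement can be measured later. The function name `assign_batches`, the `overlap` parameter, and the annotator names are hypothetical choices made for this example, not part of any particular tool.

```python
import random


def assign_batches(item_ids, annotators, overlap=0.25, seed=0):
    """Split items among annotators round-robin.

    A share of the items (`overlap`) is also given to a second annotator
    so that inter-annotator agreement can be measured on that subset.
    """
    if len(annotators) < 2:
        raise ValueError("need at least two annotators for overlapping items")
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    items = list(item_ids)
    rng.shuffle(items)

    assignments = {name: [] for name in annotators}
    for i, item in enumerate(items):
        assignments[annotators[i % len(annotators)]].append(item)

    # The first n_overlap shuffled items get a second, different annotator.
    n_overlap = int(len(items) * overlap)
    for i, item in enumerate(items[:n_overlap]):
        second = annotators[(i + 1) % len(annotators)]
        assignments[second].append(item)
    return assignments


item_ids = [f"item_{i}" for i in range(20)]
batches = assign_batches(item_ids, ["ann_a", "ann_b", "ann_c"])
for name, batch in batches.items():
    print(name, len(batch))
```

The overlap share trades labeling cost against how much data is available for quality checks, so it is worth tuning per project.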

Data Labeling Process

The data labeling process involves several steps and considerations to annotate or tag data with labels, attributes, or annotations that provide context, meaning, or information for ML and AI applications. Here is a detailed overview of the data labeling process:

-

Define Labeling Task:

- Identify the ML objectives and the data type to be labeled (images, text, audio, video, structured data).

- Define the specific labeling task based on ML requirements (for example, object detection, named entity recognition, sentiment analysis, or transcription).

-

Collect and Prepare Data:

- Gather relevant data samples that represent the target domain, diversity of scenarios, and variations in data.

- Preprocess and clean data by removing noise, duplicates, outliers, and irrelevant information.

- Organize data into suitable formats for labeling tasks, such as images, text documents, audio recordings, or structured datasets.

-

Labeling Guidelines:

- Develop comprehensive labeling guidelines defining labeling criteria, standards, and quality metrics.

- Specify labeling instructions, annotation types, labeling conventions, and data format requirements.

- Provide examples, edge cases, and guidelines for handling ambiguity or complex labeling scenarios.

-

Annotation Tools:

- Choose appropriate annotation tools or platforms based on the labeling task and data format.

- Use annotation tools that support the required annotation types (bounding boxes, polygons, text tags, audio transcriptions).

- Ensure annotation tools are user-friendly and intuitive and facilitate efficient labeling workflows.

-

Assign Labels:

- Annotators review data samples and assign appropriate labels, attributes, or annotations based on the labeling guidelines.

- Perform labeling tasks such as drawing bounding boxes around objects in images, tagging named entities in text, transcribing audio, or annotating events in video.

-

Quality Assurance (QA):

- Implement robust QA processes to validate labeled data for accuracy, consistency, and reliability.

- Use multiple annotators for validation, resolve disagreements, and apply consensus rules to maintain labeling quality.

- Conduct QA checks, inter-annotator agreement (IAA) assessments, and error detection to ensure high-quality labels.

-

Validation Against Ground Truth:

- Validate labeled data against ground truth or expert-labeled data to assess labeling accuracy and alignment with ML objectives.

- Compare annotated data with reference data, perform validation checks, and verify annotations for correctness and completeness.

-

Iterative Improvement:

- Gather feedback from annotators, domain experts, and stakeholders to identify areas for improvement in labeling guidelines and processes.

- Iterate on labeling workflows, address labeling challenges, and incorporate feedback to enhance labeling accuracy and efficiency.

-

Integration with ML Models:

- Once labeled data is validated, integrate it into ML pipelines for training, validation, and testing of ML models.

- Labeled data serves as the training dataset for ML algorithms, which are then evaluated and used to make predictions or classifications on new, unseen data.

-

Monitoring and Maintenance:

- Continuously monitor and maintain labeled data to ensure data quality, update labeling guidelines as needed, and address drift or changes in data distributions.

- Monitor model performance, analyze feedback loops, and adapt labeling processes to evolving ML requirements and data challenges.

The data labeling process requires collaboration among annotators, domain experts, data scientists, and stakeholders to annotate data accurately. These processes optimize labeling workflows and support the development of robust ML models for AI applications.
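The validation step against ground truth can be sketched in a few lines. This is a minimal example that assumes labels are stored as dictionaries keyed by item id; the function name `accuracy_against_gold` and the sample data are hypothetical.

```python
def accuracy_against_gold(labels, gold):
    """Compare annotator labels with expert ("gold") labels.

    labels, gold: dicts mapping item id -> label. Only items present in
    both dicts are scored. Returns (accuracy, mismatches), where each
    mismatch is a tuple (item_id, annotator_label, gold_label).
    """
    shared = sorted(set(labels) & set(gold))
    if not shared:
        raise ValueError("no overlapping items to validate")
    mismatches = [
        (item, labels[item], gold[item])
        for item in shared
        if labels[item] != gold[item]
    ]
    accuracy = 1 - len(mismatches) / len(shared)
    return accuracy, mismatches


annotator_labels = {"t1": "positive", "t2": "negative", "t3": "neutral", "t4": "positive"}
gold_labels = {"t1": "positive", "t2": "negative", "t3": "negative", "t5": "neutral"}

accuracy, mismatches = accuracy_against_gold(annotator_labels, gold_labels)
print(f"accuracy on gold items: {accuracy:.2f}")
print("to review:", mismatches)
```

The mismatch list is often more useful than the score itself, because it points to the guideline passages that annotators interpret differently from the experts.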

What Factors Affect the Quality of Data Labeling?

Various factors can influence the quality of data labeling and, in turn, the accuracy, consistency, and reliability of labeled datasets. Here are key factors that can affect the quality of data labeling:

-

Labeling Guidelines:

- Clear and comprehensive labeling guidelines are essential for annotators to understand the labeling task, criteria, standards, and annotation types.

- Ambiguous or vague guidelines can lead to inconsistent labeling, misinterpretation of labeling requirements, and errors in annotations.

-

Annotation Tools:

- The choice of annotation tools or platforms can impact labeling quality. Tools that are user-friendly and offer features like annotation templates, keyboard shortcuts, and validation checks can enhance accuracy and efficiency.

- Inadequate or cumbersome annotation tools may lead to errors, inefficiencies, and inconsistencies in labeling tasks.

-

Annotator Expertise:

- Annotators’ expertise, training, and experience play a crucial role in labeling quality. Knowledgeable annotators can make accurate labeling decisions, handle complex labeling tasks, and adhere to labeling guidelines.

- Lack of expertise, training, or experience may result in inaccurate annotations, misinterpretation of data, and inconsistencies among annotators.

-

Quality Assurance (QA) Processes:

- Implementing robust QA processes, such as validation checks, inter-annotator agreement (IAA) assessments, and error detection mechanisms, is essential to validating labeled data, identifying discrepancies, and ensuring labeling accuracy.

- Inadequate QA procedures can lead to undetected labeling errors, inconsistencies, and compromised labeling quality.

-

Consensus and Disagreements:

- Resolving disagreements among annotators through consensus-building mechanisms like majority voting, adjudication, or expert review is critical to maintaining labeling consistency and accuracy.

- Failure to address disagreements can result in conflicting annotations, reduced reliability, and compromised model performance.

-

Data Complexity and Ambiguity:

- Complex or ambiguous data samples, like ambiguous text, overlapping objects in images, or unclear boundaries in segmentation tasks, pose challenges for annotators and can lead to labeling errors.

- Providing clear guidelines, examples, and training on handling ambiguity is important to mitigate labeling challenges and improve accuracy.

-

Data Diversity and Representation:

- Ensuring diversity and representation in labeled datasets is crucial to avoid biases and help models generalize. It also enhances model robustness across different scenarios, demographics, and use cases.

- Biased or unrepresentative datasets can lead to biased models, limited generalization, and performance issues in real-world applications.

-

Iterative Feedback and Improvement:

- Incorporating feedback from annotators, domain experts, and stakeholders and iterating on labeling workflows, guidelines, and tools based on learnings from labeling tasks is essential for continuous improvement and enhancing labeling quality over time.

- A lack of feedback mechanisms and iterative improvements can result in stagnant labeling practices, missed opportunities for optimization, and suboptimal labeling quality.

Addressing these factors and implementing best practices in data labeling workflows can improve the quality, accuracy, and reliability of labeled datasets. This leads to better-performing machine learning models and AI-driven solutions.
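Majority voting with escalation to expert review, as described under consensus and disagreements, might look like the sketch below. The `min_agreement` threshold and the function name `merge_by_majority` are assumptions for illustration; real projects may weight annotators or use more elaborate adjudication.

```python
from collections import Counter


def merge_by_majority(annotations, min_agreement=0.5):
    """Merge labels from several annotators by majority vote.

    annotations: dict mapping item id -> list of labels (one per annotator).
    Returns (consensus, needs_review). An item is sent to review when its
    most common label does not exceed the `min_agreement` share of votes.
    """
    consensus, needs_review = {}, []
    for item_id, labels in annotations.items():
        if not labels:
            needs_review.append(item_id)  # nothing to vote on
            continue
        top_label, top_count = Counter(labels).most_common(1)[0]
        if top_count / len(labels) > min_agreement:
            consensus[item_id] = top_label
        else:
            needs_review.append(item_id)  # tie or weak majority: escalate
    return consensus, needs_review


annotations = {
    "img_001": ["cat", "cat", "dog"],
    "img_002": ["dog", "cat"],  # two annotators disagree
    "img_003": ["bird", "bird", "bird"],
}
consensus, needs_review = merge_by_majority(annotations)
print(consensus)      # items with a clear majority
print(needs_review)   # items for adjudication or expert review
```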

Techniques and Tools for Effective Data Labeling

Effective data labeling is essential for training accurate and reliable machine learning models. Various techniques and tools are available to streamline the data labeling process and help ensure efficiency, consistency, and quality. Let us explore some important techniques and tools for effective data labeling.

-

Manual Labeling:

- Human Annotation: Involves human annotators manually labeling data points based on predefined criteria or guidelines. Human annotation ensures high accuracy and nuanced labeling. That is especially true for complex tasks requiring domain expertise.

- Crowdsourcing Platforms: Platforms such as Amazon Mechanical Turk, CrowdFlower (later renamed Figure Eight and acquired by Appen), and Labelbox facilitate crowdsourced labeling by leveraging a large pool of annotators to label data at scale. Crowdsourcing is cost-effective and accelerates labeling for large datasets.

-

Semi-Automated Labeling:

- Active Learning: Active learning algorithms identify the most informative data points for labeling. Models actively request labels for the data points that are most uncertain or most likely to improve model performance, which optimizes annotation efforts and reduces labeling costs and time.

- Weak Supervision: Weak supervision techniques generate initial labels automatically using heuristics, rules, or noisy labels. These labels are then refined through human validation, improving efficiency while maintaining labeling accuracy.

-

Automated Labeling:

- Rule-based Labeling: Rule-based labeling uses predefined rules or patterns to assign labels to data points automatically. It is efficient for straightforward tasks. However, it may lack the nuance and context provided by human annotators.

- Machine Learning-based Labeling: ML models, such as deep learning models (optionally combined with active learning), can be trained to label data automatically based on patterns and features. These models require initial training data and human validation, but they can significantly speed up labeling for large datasets.

-

Annotation Tools and Platforms:

- Labeling Tools: Tools like LabelImg, LabelMe, and CVAT provide graphical interfaces for annotators to label images with bounding boxes, polygons, or keypoints. They offer annotation features tailored to specific data types and annotation requirements.

- Text Annotation Platforms: Prodigy, Brat, and Tagtog specialize in text annotation. They enable annotators to label text data for tasks such as named entity recognition, sentiment analysis, or document classification.

- End-to-End Labeling Platforms: Comprehensive platforms like Labelbox, Supervisely, and Scale AI offer end-to-end solutions for data labeling. They include project management, collaboration, quality control, and integration with ML pipelines.

-

Quality Control and Validation:

- Inter-Annotator Agreement (IAA): IAA measures the consistency and agreement among multiple annotators labeling the same data. It helps identify discrepancies and ensure labeling accuracy and reliability.

- Validation Checks: Automated validation checks, such as label consistency checks, outlier detection, and data distribution analysis, help maintain labeling quality and flag potential errors or inconsistencies.

By using a combination of manual, semi-automated, and automated labeling techniques, robust annotation tools, and quality control measures, organizations can achieve effective data labeling for training high-performance machine learning models. Tailoring labeling approaches to specific data types, tasks, and project requirements optimizes labeling efficiency and model accuracy.
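One of the automated validation checks mentioned above, a label consistency check, can be sketched as follows. The example assumes text records stored as `(text, label)` pairs and treats texts that differ only in case or whitespace as duplicates; both choices, and the function name `find_conflicting_labels`, are assumptions for illustration.

```python
from collections import defaultdict


def find_conflicting_labels(records):
    """Report texts that appear more than once with different labels.

    records: iterable of (text, label) pairs.
    Returns a dict mapping normalized text -> sorted list of its labels,
    restricted to texts that carry more than one distinct label.
    """
    labels_by_text = defaultdict(set)
    for text, label in records:
        key = " ".join(text.lower().split())  # ignore case and extra spaces
        labels_by_text[key].add(label)
    return {
        text: sorted(labels)
        for text, labels in labels_by_text.items()
        if len(labels) > 1
    }


records = [
    ("Great product!", "positive"),
    ("great  product!", "negative"),  # same text, conflicting label
    ("Arrived late", "negative"),
    ("Arrived late", "negative"),
]
print(find_conflicting_labels(records))
```

Conflicts found this way are good candidates for adjudication, and recurring conflicts usually indicate a gap in the labeling guidelines.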

Manual Data Labeling

Manual data labeling is a fundamental approach that involves human annotators meticulously labeling data points based on predefined criteria or guidelines. This method is widely used for tasks requiring human expertise, nuanced understanding, and accuracy. Let us explore the critical aspects of manual data labeling and its effectiveness in training machine learning models.

-

Human Annotation Process:

- Data Understanding: Annotators begin by understanding the data and the labeling task. They familiarize themselves with labeling guidelines, class definitions, and specific instructions for accurate labeling.

- Labeling Tools: Annotators use labeling tools or platforms designed for manual annotation, such as LabelImg, LabelMe, or custom-built annotation interfaces. These tools offer functionalities like drawing bounding boxes, polygons, and keypoints, or labeling text data.

- Labeling Consistency: It is crucial to ensure consistency across annotators. Guidelines, examples, and regular training sessions help maintain consistent labeling standards and reduce discrepancies.

-

Domain Expertise and Contextual Understanding:

- Subject Matter Experts (SMEs): In tasks requiring domain-specific knowledge, SMEs play a vital role in accurate labeling. They provide insights, validate labels, and ensure that annotations reflect the nuances and complexities of the data.

- Contextual Understanding: Human annotators bring contextual understanding to the labeling process. They can interpret subtle features, variations, and contextual clues that may impact the labeling decision, which enhances the quality of labeled data.

-

Complex Annotation Tasks:

- Image Annotation: For tasks like object detection, segmentation, or image classification, annotators draw bounding boxes, outline regions of interest, or label specific image attributes.

- Text Annotation: In natural language processing tasks, annotators label text data for named entity recognition, sentiment analysis, text classification, or semantic annotation.

-

Quality Control and Validation:

- Inter-Annotator Agreement (IAA): Comparing labels among multiple annotators helps measure agreement and consistency. IAA metrics like Cohen’s kappa (for two annotators) or Fleiss’ kappa (for more than two) quantify labeling agreement and guide quality control efforts.

- Regular Reviews and Feedback: Continuous review, feedback, and validation loops ensure labeling accuracy and address inconsistencies or errors. Feedback mechanisms improve annotator performance and maintain labeling quality over time.

-

Scalability and Flexibility:

- Scalability: Manual labeling can be scaled by employing teams of annotators, leveraging crowdsourcing platforms, or implementing workflow optimizations.

- Flexibility: Manual labeling allows for flexibility in handling diverse data types, complex labeling tasks, and evolving requirements. Annotators can adapt to new guidelines, updates, or changes in labeling criteria.

Manual data labeling is labor-intensive. However, it remains indispensable for tasks demanding human judgment, context, and expertise. When executed with precision, consistency, and quality control measures, it yields high-quality labeled datasets, which are essential for training accurate and reliable machine learning models.
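To make the inter-annotator agreement metric concrete, here is a small, dependency-free sketch of Cohen's kappa for two annotators. It follows the standard definition, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. The sample labels are made up for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item list")
    n = len(labels_a)

    # Observed agreement: share of items where both annotators agree.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: product of each annotator's label frequencies per class.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)

    if p_e == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg", "pos", "pos"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos", "pos", "pos"]
print(f"kappa = {cohens_kappa(annotator_1, annotator_2):.3f}")
```

Values close to 1 indicate strong agreement, while values near 0 mean the annotators agree no more often than chance would predict. Which threshold counts as acceptable depends on the task.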

Semi-Automated Labeling

Semi-automated data labeling combines human expertise with automation, leveraging the strengths of both approaches to streamline the labeling process and improve efficiency. This hybrid approach is particularly effective for tasks where manual labeling is resource-intensive, but human judgment and validation are still essential. Let us explore the critical aspects of semi-automated data labeling and its benefits in training machine learning models.

-

Active Learning Techniques:

- Uncertainty Sampling: Active learning algorithms identify data points the model is uncertain about or where additional labeling would improve model performance. Annotators focus on these critical data points, optimizing labeling efforts and improving model accuracy.

- Query Strategies: Strategies like margin sampling, entropy-based sampling, or diversity sampling guide annotators to label informative and diverse examples. These query strategies ensure comprehensive model training with minimal labeling redundancy.

-

Weak Supervision Methods:

- Heuristic Rules: Weak supervision uses heuristic rules or patterns to assign initial labels to data points automatically. While these labels may be noisy or imperfect, they serve as starting points for human validation and refinement.

- Noisy Label Handling: Techniques like noise-aware training or label cleaning algorithms identify and correct noisy labels in semi-supervised settings, thereby improving label quality and model robustness.

-

Human-in-the-Loop Labeling:

- Interactive Labeling Interfaces: Semi-automated tools provide interactive interfaces where annotators can collaborate with machine learning models. Annotators validate model predictions, correct errors, and provide feedback, improving model performance iteratively.

- Model Feedback Loops: Models trained on partially labeled data provide feedback to annotators by highlighting areas of uncertainty or potential labeling errors. This feedback loop enhances labeling accuracy and guides annotators to focus on challenging data points.

-

Efficiency and Cost Savings:

- Reduced Labeling Efforts: Semi-automated approaches reduce manual labeling efforts by focusing on informative data points or leveraging automated labeling techniques. Annotators prioritize critical areas, optimizing resource allocation and reducing labeling redundancy.

- Cost-Effective Scaling: Semi-automated labeling enhances scalability. It allows organizations to handle large datasets efficiently without compromising labeling quality. Crowdsourcing platforms and active learning strategies further facilitate cost-effective scaling.

-

Quality Assurance and Validation:

- Human Oversight: Despite automation, human oversight and validation remain crucial. Annotators validate automated labels, correct errors, and ensure consistency and accuracy.

- Quality Control Measures: Regular validation checks, inter-annotator agreement analysis, and feedback mechanisms maintain labeling quality and address any discrepancies or labeling biases.

Semi-automated labeling integrates automation with human expertise. It optimizes the labeling process, accelerates model development, and improves overall labeling quality. By striking a balance between efficiency and accuracy, it is a valuable approach for effectively training machine learning models.
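Two of the query strategies named above, entropy-based sampling and margin sampling, can be sketched with nothing but the model's predicted class probabilities. The item ids and probabilities below are invented, and `select_for_labeling` is a hypothetical helper name; the example assumes each item has at least two classes.

```python
import math


def entropy(probs):
    """Shannon entropy of a probability vector; higher means more uncertain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def margin(probs):
    """Gap between the two most likely classes; smaller means more uncertain."""
    top_two = sorted(probs, reverse=True)[:2]
    return top_two[0] - top_two[1]


def select_for_labeling(predictions, k, strategy="entropy"):
    """Pick the k most uncertain items to send to annotators.

    predictions: dict mapping item id -> list of class probabilities.
    """
    if strategy == "entropy":
        ranked = sorted(predictions, key=lambda i: entropy(predictions[i]), reverse=True)
    elif strategy == "margin":
        ranked = sorted(predictions, key=lambda i: margin(predictions[i]))
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return ranked[:k]


predictions = {
    "doc_a": [0.98, 0.01, 0.01],  # confident prediction
    "doc_b": [0.40, 0.35, 0.25],  # spread across all classes
    "doc_c": [0.50, 0.49, 0.01],  # torn between two classes
}
print(select_for_labeling(predictions, 1, "entropy"))  # ['doc_b']
print(select_for_labeling(predictions, 1, "margin"))   # ['doc_c']
```

The two strategies can disagree, as they do here: entropy favors items whose probability is spread over many classes, while margin favors items where the top two classes are nearly tied.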

Automated Labeling

Automated data labeling leverages machine learning algorithms and computational techniques to assign labels to data points automatically, without direct human intervention. This approach efficiently handles large volumes of data and repetitive labeling tasks, accelerating the data preparation process for machine learning model training. Let us discuss the key aspects and benefits of automated data labeling:

-

Rule-based Labeling:

- Predefined Rules: Automated labeling systems use predefined rules, patterns, or heuristics to assign labels to data points based on specific criteria. For example, in text classification, rules may classify emails as spam or non-spam based on keywords or patterns.

- Simple Labeling Tasks: Rule-based labeling is effective for straightforward tasks with clear decision boundaries, where manual intervention is not required for label assignment.

-

Machine Learning-based Labeling:

- Supervised Learning Models: Machine learning algorithms, such as classification models, are trained on labeled data to predict labels for unlabeled data points. These models learn from labeled examples and generalize to unseen data.

- Active Learning: Active learning algorithms iteratively train models using feedback from labeled data points. They prioritize uncertain or informative data points for labeling, which improves model accuracy with minimal human effort.

-

Text Annotation:

- Named Entity Recognition (NER): Automated NER systems identify and classify entities (names, locations, organizations) in text data without manual annotation. NER models leverage linguistic features, context, and patterns to extract entities accurately.

- Sentiment Analysis: Automated sentiment analysis tools classify text sentiments (positive, negative, neutral) based on language patterns, sentiment lexicons, and machine learning models. These tools are used in social media monitoring, customer feedback analysis, and opinion mining.

-

Image and Video Annotation:

- Object Detection: Automated object detection algorithms identify and localize objects within images or videos by assigning bounding boxes or segmenting object regions. Deep learning models like YOLO (You Only Look Once) or Faster R-CNN automate object detection tasks.

- Facial Recognition: Automated facial recognition systems detect and recognize faces in images or videos. They enable applications like identity verification, surveillance, or access control.

-

Benefits of Automated Labeling:

- Scalability: Automated labeling scales efficiently to handle large datasets. It reduces the manual effort and time required for labeling tasks.

- Consistency: Automated labeling ensures consistent labeling decisions across data points. Thus, it minimizes labeling discrepancies and errors.

- Cost Savings: By automating repetitive labeling tasks, organizations save on manual annotation efforts and resource allocation costs.

- Speed and Efficiency: Automated labeling accelerates the data preparation process, enabling faster model development and deployment.

-

Challenges and Considerations:

- Data Quality: Automated labeling relies on the quality of training data and model accuracy. Ensuring high-quality training data and validating model outputs are essential for reliable automated labeling.

- Complex Tasks: Complex labeling tasks that require contextual understanding, domain expertise, or subjective judgment may still require human annotation or validation.

Automated data labeling is a powerful tool for streamlining data preparation in machine learning projects. It offers scalability, consistency, and efficiency benefits. While it excels in certain tasks, human oversight and validation remain crucial for ensuring labeling accuracy and addressing challenges in complex labeling scenarios.
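The spam example from the rule-based labeling discussion can be sketched with a handful of regular expressions. The keyword lists below are hypothetical and far too small for real use; the point is the structure, in which a rule either assigns a label or abstains so that a human can decide.

```python
import re

# Hypothetical keyword patterns, chosen only for illustration.
SPAM_PATTERNS = [r"\bfree money\b", r"\bclick here\b", r"\byou are a winner\b"]
HAM_PATTERNS = [r"\bmeeting agenda\b", r"\binvoice attached\b"]


def matches_any(text, patterns):
    """True if any pattern occurs in the text (case-insensitive)."""
    return any(re.search(p, text, flags=re.IGNORECASE) for p in patterns)


def rule_based_label(text):
    """Return "spam", "non-spam", or None when the rules cannot decide."""
    is_spam = matches_any(text, SPAM_PATTERNS)
    is_ham = matches_any(text, HAM_PATTERNS)
    if is_spam and not is_ham:
        return "spam"
    if is_ham and not is_spam:
        return "non-spam"
    return None  # no rule fired, or rules conflict: leave for a human


emails = [
    "Click HERE to claim your free money",
    "Meeting agenda for Monday",
    "Lunch tomorrow?",
    "Invoice attached, click here to pay",
]
for email in emails:
    print(repr(email), "->", rule_based_label(email))
```

Letting the rules abstain keeps the automated labels precise and makes the share of undecided items a useful signal of how far simple rules can go on a given dataset.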

Manual vs. Automated Data Labeling

Manual and automated data labeling each offer distinct advantages and considerations, catering to different labeling needs, complexities, and resource constraints. Understanding the differences between these approaches is crucial for choosing the most suitable method for data labeling tasks. Let us compare manual and automated data labeling methods across various dimensions:

-

Accuracy and Precision:

- Manual Labeling: Human annotators can provide precise and accurate labels, especially for complex tasks that require domain expertise or nuanced understanding. However, labeling consistency and inter-annotator agreement may vary.

- Automated Labeling: Automated methods offer consistency and precision in label assignment, ensuring uniformity across data points. However, accuracy depends on the quality of training data, model performance, and the complexity of labeling tasks.

-

Scalability and Efficiency:

- Manual Labeling: Manual labeling can be time-consuming and resource-intensive for large datasets or repetitive tasks. Scaling manual labeling efforts requires additional human annotators and coordination.

- Automated Labeling: Automated methods excel in scalability and efficiency. They can handle large volumes of data quickly and consistently, reduce manual effort, and accelerate labeling workflows, which makes them suitable for high-throughput labeling tasks.

-

Complexity of Labeling Tasks:

- Manual Labeling: Manual labeling is effective for tasks requiring human judgment, contextual understanding, or subjective interpretation. It suits semantic segmentation, sentiment analysis, or qualitative data labeling tasks.

- Automated Labeling: Automated methods are efficient for straightforward labeling tasks with clear decision boundaries, such as object detection, named entity recognition, or binary classification. However, they may struggle with tasks requiring nuanced interpretation or domain expertise.

-

Cost Considerations:

- Manual Labeling: Manual labeling can be costly due to labor expenses, especially for large datasets or specialized tasks requiring skilled annotators. It also requires ongoing training, supervision, and quality control efforts.

- Automated Labeling: Automated methods offer cost savings by reducing manual effort and resource allocation. However, initial setup costs, model development, and validation efforts are required. Complex automated labeling systems may also incur infrastructure and maintenance costs.

-

Labeling Consistency and Quality Control:

- Manual Labeling: Ensuring labeling consistency and quality control is a challenge in manual labeling, as it relies on human judgment and interpretation. Inter-annotator agreement metrics, regular reviews, and feedback loops help maintain labeling quality.

- Automated Labeling: Automated methods provide consistent labeling decisions, reducing variability and errors. Quality control measures focus on validating model outputs, assessing label accuracy, and handling noisy or incorrect labels generated by automated systems.

-

Flexibility and Adaptability:

- Manual Labeling: Manual labeling offers flexibility and adaptability to evolving labeling criteria, changes in task requirements, or updates in labeling guidelines. Human annotators can adjust to complex or dynamic labeling scenarios.

- Automated Labeling: Automated methods are less flexible in handling complex or dynamic labeling tasks that require human judgment or contextual understanding. They are suitable for repetitive, well-defined tasks with static labeling criteria.

The choice between manual and automated data labeling methods depends on the specific requirements, complexity, scalability, budget, and desired level of accuracy and efficiency for a given labeling task. Hybrid approaches, combining manual validation with automated labeling, can also leverage the strengths of both methods to optimize labeling workflows and ensure high-quality labeled datasets for machine learning model training.
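A hybrid workflow of this kind is often implemented as a simple confidence-based router: automated labels above a threshold are accepted, and the rest go to human annotators with the model's suggestion attached. The sketch below assumes the model outputs a confidence score between 0 and 1; the 0.9 threshold and the function name `route_predictions` are illustrative assumptions.

```python
def route_predictions(predictions, threshold=0.9):
    """Split model predictions into auto-accepted labels and a human queue.

    predictions: iterable of (item_id, label, confidence) tuples.
    Returns (auto_accepted, human_queue).
    """
    auto_accepted, human_queue = {}, []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            auto_accepted[item_id] = label
        else:
            # Keep the model's suggestion as a pre-label for the annotator.
            human_queue.append((item_id, label))
    return auto_accepted, human_queue


predictions = [
    ("img_1", "car", 0.97),
    ("img_2", "truck", 0.62),
    ("img_3", "car", 0.91),
    ("img_4", "bicycle", 0.55),
]
auto_accepted, human_queue = route_predictions(predictions)
print("auto-accepted:", auto_accepted)
print("for human review:", human_queue)
```

Raising the threshold sends more items to annotators and lowers the risk of accepting wrong labels; auditing a random sample of the auto-accepted labels helps confirm that the threshold is set sensibly.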

Large Language Models for Data Labeling

Large language models such as GPT-3 can be used in several ways for data labeling in NLP and other text-related tasks. Here is how large language models can be leveraged for data labeling:

-

Named Entity Recognition (NER):

- Large language models can be fine-tuned to perform named entity recognition tasks. In these tasks, they can identify and label entities such as names, organizations, locations, dates, and others in text data.

- When trained on annotated data and fine-tuned using techniques like transfer learning, the language model can learn to recognize and label entities accurately in new text inputs.

-

Sentiment Analysis:

- Language models like GPT-3 can be used for sentiment analysis tasks. In these tasks, they analyze text data to determine the sentiment (positive, negative, neutral) expressed in the text.

- When trained on labeled sentiment analysis datasets and fine-tuned for specific domains or contexts, the model can efficiently classify text into sentiment categories.

-

Text Classification:

- Large language models can be utilized for text classification tasks. They categorize text into predefined classes or categories based on content, topic, or intent.

- Training the language model on labeled text classification datasets and fine-tuning it with domain-specific data enables it to classify text accurately and efficiently.

-

Intent Recognition:

- Language models like GPT-3 can be employed for intent recognition tasks, which identify the intent or purpose behind text data and are commonly used in chatbots and virtual assistants.

- When trained on annotated intent recognition datasets and fine-tuned for specific intents or domains, the model can recognize user intents and respond accordingly.

-

Data Augmentation:

- Large language models can generate synthetic data or augment existing labeled datasets by generating variations of labeled data points.

- Using techniques like paraphrasing, text generation, or context expansion, language models can create diverse data samples that can be used to augment labeled datasets and improve model robustness.

-

Error Detection and Correction:

- Language models can assist in error detection and correction tasks. They can identify errors or inconsistencies in labeled data and suggest corrections.

- By analyzing labeled data and comparing it with model predictions, language models can highlight labeling errors, inconsistencies, or missing annotations for review and correction.

-

Active Learning and Data Sampling:

- Large language models can be used for active learning strategies. They intelligently select data samples for annotation based on uncertainty or informativeness scores.

- Model predictions and confidence scores can guide data sampling strategies, prioritize labeling efforts, and maximize learning gain with minimal labeled data.

-

Quality Assurance (QA) and Validation:

- Language models can assist in quality assurance and validation of labeled data by comparing model predictions with ground truth labels, performing inter-annotator agreement checks, and detecting labeling errors.

- Language models can analyze model predictions to identify discrepancies, validate label correctness, and ensure labeling quality in annotated datasets.

Large language models like GPT-3 can be valuable tools for data labeling tasks in NLP and text-related domains. They offer capabilities such as named entity recognition, sentiment analysis, text classification, intent recognition, data augmentation, error detection, active learning, and quality assurance/validation. Integrating these models into data labeling workflows can enhance the efficiency, accuracy, and scalability of labeled data generation for ML and AI applications.
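Besides fine-tuning, a language model can also be prompted to return a label directly. The sketch below shows only the plumbing around such a call: building the prompt and mapping the free-form reply onto an allowed label set. It deliberately does not use any real provider's API. The `complete` argument stands for any function that takes a prompt string and returns the model's reply, and `fake_complete` is a stand-in used purely for demonstration.

```python
def build_prompt(text, labels):
    """Compose a simple classification prompt for a text-completion model."""
    options = ", ".join(labels)
    return (
        f"Classify the sentiment of the text as one of: {options}.\n"
        f"Text: {text}\n"
        "Answer with the label only."
    )


def parse_label(response, labels):
    """Map a model reply onto the allowed labels; None if it does not match."""
    cleaned = response.strip().strip(".").lower()
    for label in labels:
        if cleaned == label.lower():
            return label
    return None  # unexpected reply: route the item to a human annotator


def llm_label(text, labels, complete):
    """Label `text` using `complete`, a hypothetical callable (str -> str)."""
    return parse_label(complete(build_prompt(text, labels)), labels)


def fake_complete(prompt):
    """Stand-in for a real model call, returning a canned reply."""
    return " Positive.\n"


LABELS = ["positive", "negative", "neutral"]
print(llm_label("I love this phone", LABELS, fake_complete))  # positive
```

Validating the reply against a closed label set matters in practice, because a generative model may answer with extra words or with a label that is not in the schema.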

Computer Vision Data Labeling

Computer vision data labeling involves annotating images or video frames with labels, bounding boxes, segmentation masks, keypoints, or other annotations. These annotations provide context and information for training machine learning models in computer vision tasks. Here is an overview of computer vision data labeling:

-

Types of Annotations:

- Bounding Boxes: Annotating objects in images with rectangular bounding boxes to localize and identify objects of interest.

- Semantic Segmentation: Labeling each pixel in an image with a corresponding class label to segment objects and backgrounds.

- Instance Segmentation: Similar to semantic segmentation, but it also distinguishes between different instances of the same class (e.g., multiple cars in an image).

- Keypoint Annotation: Identifying and labeling specific points or keypoints on objects (e.g., for human pose estimation or facial keypoints).

- Polygons and Polylines: Drawing polygons or polylines around objects or regions of interest for detailed annotations.

- Object Tracking: Labeling object tracks across video frames to track movement and behavior over time.
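
To make these annotation types more concrete, here is a minimal sketch of how a bounding box and a polygon might be represented in code, including how a detailed polygon can be reduced to its enclosing bounding box. The class and field names are illustrative assumptions and do not follow any particular tool's export format.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


@dataclass
class Polygon:
    label: str
    points: list  # [(x, y), ...] in pixel coordinates

    def to_bounding_box(self):
        """Return the smallest axis-aligned box that contains the polygon."""
        xs = [x for x, _ in self.points]
        ys = [y for _, y in self.points]
        return BoundingBox(self.label, min(xs), min(ys), max(xs), max(ys))


# Hypothetical polygon drawn around a car.
car_outline = Polygon("car", [(10, 20), (50, 25), (40, 60)])
print(car_outline.to_bounding_box())
```

Going from a polygon to a box loses detail, which is why the choice of annotation type should follow the downstream task rather than the other way around.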

-

Annotation Tools:

- Use specialized annotation tools or platforms designed for computer vision tasks, such as LabelImg, LabelMe, VGG Image Annotator, CVAT, or custom-built annotation tools.

- Choose annotation tools that support the required annotation types, offer annotation guidelines, and facilitate efficient labeling workflows.

-

Annotation Process:

- Define labeling guidelines and criteria for annotators, including annotation types, labeling conventions, quality standards, and data format requirements.

- Provide annotators with training and guidelines to ensure annotation consistency, accuracy, and quality.

- Annotate images or video frames using annotation tools, following the specified labeling guidelines and criteria.

-

Quality Assurance (QA):

- Implement QA processes to validate labeled data for accuracy, completeness, and consistency.

- Use multiple annotators for validation, resolve disagreements, and apply consensus rules to maintain labeling quality.

- Conduct QA checks, inter-annotator agreement (IAA) assessments, and error detection to ensure high-quality annotations.
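
Inter-annotator agreement can be measured in several ways. The sketch below uses Cohen's kappa, one common statistic for two annotators labeling the same items, which corrects raw agreement for the agreement expected by chance. The choice of kappa and the example labels are assumptions for illustration; the right metric depends on the number of annotators and the annotation type.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item set")
    n = len(labels_a)
    # Share of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical image-level labels from two annotators.
annotator_1 = ["cat", "cat", "dog", "dog"]
annotator_2 = ["cat", "dog", "dog", "dog"]
print(cohens_kappa(annotator_1, annotator_2))
```

Values near 1 indicate strong agreement and values near 0 indicate agreement no better than chance. A low score usually points to unclear guidelines rather than careless annotators.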

-

Data Augmentation:

- Use data augmentation techniques, such as rotations, flips, scaling, brightness adjustments, or adding noise, to create variations of labeled data samples.

- Augmenting labeled data enhances model robustness, generalization, and performance by exposing models to diverse data variations.
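
When an image is augmented geometrically, its annotations have to be transformed in the same way, or the labels no longer match the pixels. The sketch below shows this for a horizontal flip, using a plain list of pixel rows as a stand-in for an image. The box convention (`x_min, y_min, x_max, y_max` with exclusive upper edges) is an assumption of this example.

```python
def hflip_image(image):
    """Mirror a row-major image (list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def hflip_box(box, image_width):
    """Mirror an (x_min, y_min, x_max, y_max) box inside an image of given width."""
    x_min, y_min, x_max, y_max = box
    return (image_width - x_max, y_min, image_width - x_min, y_max)


# A tiny 2x4 "image" and a box covering its leftmost column.
image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
]
box = (0, 0, 1, 2)

flipped_image = hflip_image(image)
flipped_box = hflip_box(box, image_width=4)
print(flipped_image, flipped_box)
```

After the flip, the box covers the rightmost column, which holds the same pixel values the original box covered.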

-

Specialized Tasks:

- For specific computer vision tasks, such as object detection, image segmentation, or facial recognition, tailor annotation approaches and tools to the task requirements.

- Incorporate domain-specific knowledge, guidelines, and best practices for accurate and meaningful annotations in specialized tasks.

-

Model Training and Evaluation:

- Labeled data can be used as the training dataset for machine learning models in computer vision tasks like object detection, image classification, or image segmentation.

- Evaluate model performance using validation datasets with ground truth labels; assess model accuracy, precision, recall, and other performance metrics.
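
For object detection, precision and recall depend on deciding when a predicted box counts as a match for a ground truth box. A common convention, assumed here, is to use intersection over union (IoU) with a threshold of 0.5. The sketch below uses a simple greedy matching and hypothetical boxes; established benchmarks define their own, more detailed matching rules.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predicted, truth, threshold=0.5):
    """Greedily match each predicted box to the best unmatched truth box."""
    unmatched = list(truth)
    true_positives = 0
    for box in predicted:
        best = max(unmatched, key=lambda t: iou(box, t), default=None)
        if best is not None and iou(box, best) >= threshold:
            unmatched.remove(best)  # each truth box can be matched only once
            true_positives += 1
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(truth) if truth else 0.0
    return precision, recall


# Hypothetical ground truth and predictions for one image.
truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
predicted = [(1, 1, 10, 10), (50, 50, 60, 60)]
print(precision_recall(predicted, truth))
```

Here one of two predictions matches a ground truth box, and one of two ground truth boxes is found, so both precision and recall are 0.5.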

-

Continuous Improvement:

- Continuously iterate on labeling workflows, guidelines, and tools based on feedback and learnings from labeling tasks.

- Gather feedback from annotators, domain experts, and stakeholders to identify areas for improvement and optimize labeling processes.

Computer vision data labeling requires attention to detail, expertise in annotation techniques, the use of specialized annotation tools, and adherence to labeling guidelines. Combined with quality assurance processes and continuous improvement efforts, these practices produce high-quality labeled datasets for training robust machine learning models in computer vision applications.

Natural Language Processing Data Labeling

Natural language processing (NLP) data labeling involves annotating text data with labels, tags, entities, sentiment labels, or other annotations. It provides context, structure, and information for training machine learning models in NLP tasks. Here is an overview of the NLP data labeling process:

-

Types of Annotations:

- Named Entity Recognition (NER): Labeling entities such as names, organizations, locations, dates, and other entities in text data.

- Sentiment Analysis: Tagging text with sentiment labels (positive, negative, neutral) to analyze sentiment expressed in text.

- Text Classification: Categorizing text into predefined classes or categories based on content, topic, or intent.

- Intent Recognition: Identifying the intent or purpose behind text data, a task commonly used in chatbots and virtual assistants.

- Part-of-Speech (POS) Tagging: Labeling words with their grammatical categories (noun, verb, adjective) in text.
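
Entity annotations are often stored as spans and then converted to per-token tags for model training. The sketch below converts token-level spans into BIO tags (B for the beginning of an entity, I for inside, O for outside). BIO is one widely used convention and is assumed here for illustration; the tokens, spans, and label names are hypothetical.

```python
def spans_to_bio(tokens, spans):
    """Convert (start_token, end_token_exclusive, label) spans to BIO tags."""
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return tags


tokens = ["Ada", "Lovelace", "visited", "London"]
spans = [(0, 2, "PER"), (3, 4, "LOC")]
for token, tag in zip(tokens, spans_to_bio(tokens, spans)):
    print(token, tag)
```

This sketch assumes spans do not overlap. Nested or overlapping entities need a richer representation than a single tag per token.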

-

Annotation Tools:

- Use annotation tools or platforms designed for NLP tasks, such as Prodigy, Label Studio, Brat, or custom-built annotation tools.

- Choose tools that support the required annotation types (NER, sentiment analysis, text classification), offer annotation guidelines, and facilitate efficient labeling workflows.

-

Annotation Process:

- Define labeling guidelines and criteria for annotators. That includes annotation types, labeling conventions, quality standards, and data format requirements.

- Provide annotators with training and guidelines to ensure annotation consistency, accuracy, and quality.

- Annotate text data using annotation tools, following the specified labeling guidelines and criteria.

-

Quality Assurance (QA):

- Implement QA processes to validate labeled data for accuracy, completeness, and consistency.

- Use multiple annotators for validation, resolve disagreements, and apply consensus rules to maintain labeling quality.

- Conduct QA checks, inter-annotator agreement (IAA) assessments, and error detection to ensure high-quality annotations.
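
A consensus rule can be as simple as a majority vote, with items escalated to a reviewer when no label wins clearly. The sketch below is one possible rule under that assumption; the `min_agreement` threshold and the use of `None` to signal escalation are illustrative choices.

```python
from collections import Counter


def consensus_label(votes, min_agreement=0.5):
    """Return the majority label, or None if no label exceeds min_agreement."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) > min_agreement else None


# Hypothetical sentiment votes from several annotators.
print(consensus_label(["positive", "positive", "negative"]))
print(consensus_label(["positive", "negative"]))
```

The first call returns the majority label. The second returns `None` because the vote is tied, which signals that the item should go to an expert for adjudication.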

-

Data Augmentation:

- Use data augmentation techniques, such as paraphrasing, text augmentation, or adding noise, to create variations of labeled data samples.

- Augmenting labeled data enhances model robustness, generalization, and performance by exposing models to diverse data variations.
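
As a small example of noise-based augmentation, the sketch below creates variants of a labeled sentence by randomly deleting words while keeping the original label. The function names, deletion probability, and seeding scheme are assumptions for illustration, not a recommendation for any specific dataset.

```python
import random


def random_deletion(text, p=0.1, seed=None):
    """Drop each word with probability p, always keeping at least one word."""
    rng = random.Random(seed)
    words = text.split()
    if not words:
        return text
    kept = [word for word in words if rng.random() >= p]
    # Fall back to a single random word rather than returning an empty string.
    return " ".join(kept) if kept else rng.choice(words)


def augment(sample, n_variants=3, p=0.1, seed=0):
    """Return n_variants noisy copies of a (text, label) pair with the same label."""
    text, label = sample
    return [(random_deletion(text, p, seed + i), label) for i in range(n_variants)]


for variant in augment(("the movie was great fun", "positive"), p=0.3):
    print(variant)
```

Deleting words can change meaning, for example when a negation such as "not" is removed from a sentiment example, so augmented samples are worth spot-checking before training.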

-

Specialized Tasks:

- For specific NLP tasks like sentiment analysis, entity recognition, or text summarization, tailor annotation approaches and tools to the task requirements.

- Incorporate domain-specific knowledge, guidelines, and best practices for accurate and meaningful annotations in specialized NLP tasks.

-

Model Training and Evaluation:

- Labeled data can be used as the training dataset for machine learning models in NLP tasks like NER, sentiment analysis, or text classification.

- Evaluate model performance using validation datasets with ground truth labels; assess model accuracy, precision, recall, F1 score, and other performance metrics.
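
The metrics named above can be computed directly from ground truth labels and model predictions. The sketch below calculates precision, recall, and F1 score for a single class treated as the positive class. The example labels are hypothetical.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall, and F1 for one class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    return precision, recall, f1


# Hypothetical validation labels and model predictions.
y_true = ["pos", "pos", "neg", "neg"]
y_pred = ["pos", "neg", "pos", "neg"]
print(precision_recall_f1(y_true, y_pred, positive="pos"))
```

For multi-class tasks, the same function can be called once per class and the results averaged, which corresponds to macro-averaging.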

-

Continuous Improvement:

- Continuously iterate on labeling workflows, guidelines, and tools based on feedback and learnings from labeling tasks.

- Gather feedback from annotators, domain experts, and stakeholders to identify areas for improvement and optimize labeling processes.

NLP data labeling requires expertise in linguistic analysis and annotation techniques, the use of specialized annotation tools, and adherence to labeling guidelines. Combined with quality assurance processes and continuous improvement efforts, these practices produce high-quality labeled datasets for training robust machine learning models in NLP applications.

Popular Labeling Tools and Platforms

Data labeling tools and platforms are crucial in streamlining the data labeling process. These tools enhance efficiency and ensure labeling accuracy for machine learning model training. Several popular tools and platforms offer a range of features tailored to different data types, labeling tasks, and project requirements. Let us explore some of the widely used data labeling tools and platforms:

-

LabelImg:

- Features: LabelImg is an open-source tool for annotating images with rectangular bounding boxes. It supports common image formats, saves annotations in formats such as PASCAL VOC XML and YOLO, and provides an intuitive graphical interface for annotators.

- Use Cases: LabelImg is popular for object detection tasks, in which annotators label objects within images by drawing bounding boxes.
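
Bounding box tools such as LabelImg can save annotations in PASCAL VOC or YOLO format, and teams often need to convert between the two. The sketch below converts a VOC-style box (absolute pixel corners) into YOLO-style values (center and size normalized by image dimensions). The function name and example numbers are hypothetical, and reading or writing the actual annotation files is left out.

```python
def voc_to_yolo(box, image_width, image_height):
    """Convert (x_min, y_min, x_max, y_max) pixels to normalized YOLO values.

    Returns (x_center, y_center, width, height), each relative to image size.
    """
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return (x_center, y_center, width, height)


# Hypothetical box in a 400x200 pixel image.
print(voc_to_yolo((100, 50, 300, 150), image_width=400, image_height=200))
```

In a YOLO label file, these four values are typically preceded by an integer class index on the same line.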

-

LabelMe:

- Features: LabelMe is a web-based platform for annotating images with polygons, keypoints, and segmentations. It supports collaborative labeling and project management, and it exports labeled data in various formats.

- Use Cases: LabelMe is suitable for semantic segmentation tasks, where annotators label image regions with semantic classes or attributes.

-

CVAT (Computer Vision Annotation Tool):

- Features: CVAT is a versatile annotation tool that supports multiple annotation types, including bounding boxes, polygons, cuboids, and semantic segmentation. It offers automation features, collaborative labeling, and project management functionalities.

- Use Cases: CVAT is used for diverse computer vision tasks such as object detection, image segmentation, video annotation, and multi-frame tracking.

-

Labelbox:

- Features: Labelbox is an end-to-end data labeling platform with a user-friendly interface, automation capabilities, and integration with machine learning workflows. It supports various annotation types, quality control measures, and team collaboration.

- Use Cases: Labelbox is suitable for large-scale data labeling projects where automation, scalability, and project management are essential.

-

Supervisely:

- Features: Supervisely is an AI-powered platform offering automated labeling, model training, and deployment capabilities. It supports object detection, semantic segmentation, image classification, and data analysis.

- Use Cases: Supervisely is used for end-to-end machine learning projects, from data labeling to model development and deployment, especially in computer vision applications.

-

Tagtog:

- Features: Tagtog is a text annotation platform specializing in named entity recognition (NER), sentiment analysis, and text classification. It offers collaborative annotation, active learning, and integration with NLP workflows.

- Use Cases: Tagtog is popular for text data labeling tasks in natural language processing, such as entity extraction, sentiment labeling, and document classification.

-

Scale AI:

- Features: Scale AI is a comprehensive data labeling platform offering human-in-the-loop labeling, quality control, and model validation services. It supports various data types, annotation types, and industry-specific labeling workflows.

- Use Cases: Scale AI is used across industries for high-quality data labeling projects, ranging from computer vision tasks to natural language processing and sensor data annotation.

These popular data labeling tools and platforms cater to diverse labeling needs. They provide a range of annotation types, automation capabilities, collaboration features, and integration with machine learning pipelines. The right tool depends on project requirements, data complexity, labeling scale, budget, and the functionalities needed for efficient and accurate data labeling.

Best Practices for Consistent and Reliable Data Labeling

Consistent and reliable data labeling is essential for training accurate and effective machine learning models. Adopting best practices ensures labeling quality, reduces errors, and improves the overall efficacy of ML solutions. Here are some key best practices for consistent and reliable data labeling:

-

Clear Labeling Guidelines:

- Develop clear and comprehensive labeling guidelines outlining labeling criteria, definitions, and standards.

- Include examples, edge cases, and guidelines for handling ambiguous or challenging data points.

- Ensure labeling guidelines are accessible to annotators and regularly updated based on feedback and task requirements.
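
Parts of a labeling guideline can also be expressed as automated checks, so that obvious violations are caught before human review. The sketch below validates annotation records against a small set of rules. The allowed label set, record fields, and rule wording are all hypothetical stand-ins for whatever your own guidelines specify.

```python
# Hypothetical label set taken from a project's labeling guidelines.
ALLOWED_LABELS = {"positive", "negative", "neutral"}


def validate_annotation(record, allowed_labels=ALLOWED_LABELS):
    """Return a list of guideline violations for one annotation record."""
    problems = []
    if not record.get("text", "").strip():
        problems.append("empty text")
    if record.get("label") not in allowed_labels:
        problems.append(f"unknown label: {record.get('label')!r}")
    return problems


print(validate_annotation({"text": "Great service", "label": "positive"}))
print(validate_annotation({"text": "Great service", "label": "postive"}))
```

Checks like these only cover the mechanical parts of a guideline. Ambiguous or borderline cases still depend on the written examples and on reviewer judgment.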

-

Training and Onboarding:

- Provide thorough training to annotators on labeling guidelines, annotation tools, and quality standards.

- Conduct onboarding sessions to familiarize annotators with project objectives, data context, and labeling expectations.

- Offer continuous education and feedback to improve annotator skills and consistency.

-

Quality Control Measures:

- Implement quality control mechanisms like inter-annotator agreement (IAA) checks, validation workflows, and error detection tools.

- Regularly review labeled data, assess labeling accuracy, and address inconsistencies or errors promptly.

- Establish feedback loops for annotators, validators, and supervisors to address labeling challenges and improve quality iteratively.
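
One way to support such feedback loops is to mix a few expert-labeled reference items into each annotator's queue and track accuracy on them. The sketch below assumes this setup; the reference ("gold") items, the tuple layout, and the annotator names are hypothetical.

```python
from collections import defaultdict


def annotator_accuracy(annotations, gold):
    """Accuracy per annotator on items that have an expert reference label.

    `annotations` is a list of (annotator, item_id, label) tuples and
    `gold` maps item ids to reference labels.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for annotator, item_id, label in annotations:
        if item_id in gold:  # items without a reference label are skipped
            total[annotator] += 1
            correct[annotator] += int(label == gold[item_id])
    return {annotator: correct[annotator] / total[annotator] for annotator in total}


gold = {"img-1": "cat", "img-2": "dog"}
annotations = [
    ("alice", "img-1", "cat"),
    ("alice", "img-2", "dog"),
    ("bob", "img-1", "cat"),
    ("bob", "img-2", "cat"),
    ("bob", "img-3", "dog"),  # no reference label for this item
]
print(annotator_accuracy(annotations, gold))
```

Low accuracy for one annotator suggests targeted retraining, while low accuracy across all annotators on the same items suggests the guidelines themselves need revision.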

-

Consistency Across Annotators:

- Ensure labeling consistency by aligning annotators on labeling standards, terminology, and interpretation.