This post covers the latest trends in CPU microarchitecture design, how microarchitecture affects performance, and where processor design is headed next.

Introduction

What is CPU Microarchitecture?

CPU microarchitecture is the internal structure and organization of a processor. It defines how the processor executes instructions and processes data, and how it interacts with memory and other hardware components. This underlying design determines a CPU’s efficiency, speed, and overall performance.

Key Components of CPU Microarchitecture

Modern microarchitecture consists of several fundamental components:

- Instruction Fetch and Decode Units – These units retrieve instructions from memory and decode them into micro-operations for execution.

- Execution Units (ALUs & FPUs) – The Arithmetic Logic Unit (ALU) handles integer and logical operations. The Floating-Point Unit (FPU) handles floating-point (real-number) arithmetic.

- Pipelines – CPUs use pipelining to break instruction processing into stages, so that several instructions can be in flight at once, each in a different stage. This raises throughput without shortening the time any single instruction takes.

- Cache Memory – High-speed L1, L2, and L3 caches store frequently used data, which reduces how often the CPU must wait on slower main memory (RAM).

- Branch Prediction & Speculative Execution – These mechanisms guess the direction of upcoming branches and execute ahead along the predicted path, which minimizes delays when the guess is right.

- Memory Management & Interconnects – These handle address translation and move data between CPU cores, caches, and memory. Efficient data flow here improves overall system performance.
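To make the pipelining idea concrete, here is a minimal sketch of the textbook ideal-pipeline model. It assumes every stage takes one cycle and that there are no stalls or hazards, which real CPUs do not achieve. The function names and the 5-stage, 100-instruction figures are illustrative assumptions, not taken from any specific processor.

```python
def unpipelined_cycles(instructions: int, stages: int) -> int:
    """Cycles needed when each instruction finishes every stage before the next one starts."""
    return instructions * stages


def pipelined_cycles(instructions: int, stages: int) -> int:
    """Cycles for an ideal pipeline: fill it once, then complete one instruction per cycle."""
    if instructions == 0:
        return 0
    return stages + (instructions - 1)


if __name__ == "__main__":
    n, k = 100, 5  # hypothetical workload: 100 instructions on a 5-stage pipeline
    slow = unpipelined_cycles(n, k)
    fast = pipelined_cycles(n, k)
    print(f"unpipelined: {slow} cycles, pipelined: {fast} cycles, speedup: {slow / fast:.2f}x")
```

In this idealized model the speedup approaches the number of stages as the instruction count grows. Branch mispredictions and cache misses are what keep real pipelines below that limit.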

CPU microarchitecture differs from Instruction Set Architecture (ISA). The ISA defines the commands a processor can execute (x86, ARM, RISC-V) and acts as the contract between software and hardware. Microarchitecture determines how those instructions are actually executed within the CPU, so two processors that share an ISA can have very different internal designs.

Why Microarchitecture Matters in Modern Computing

The evolution of microarchitecture is a driving force behind improvements in computing power, energy efficiency, and security. Here is why it plays a crucial role:

Performance Enhancement

- The efficiency of a CPU’s microarchitecture directly impacts its processing speed and computational power.

- Innovations like out-of-order execution, simultaneous multithreading (SMT), and instruction-level parallelism enable faster task execution.

- Example: Intel’s Hyper-Threading and AMD’s SMT implementation allow a single physical core to run multiple threads at once, which improves multitasking throughput.

Power Efficiency & Thermal Management

- Growing demand for mobile devices and energy-efficient data centers has made power optimization a key factor in CPU design.

- Techniques like dynamic voltage scaling (DVS), power gating, and clock gating help reduce power consumption with minimal impact on performance.

- Example: ARM’s big.LITTLE architecture pairs power-hungry high-performance cores for heavy tasks with energy-efficient cores for lighter workloads.

Scalability & Parallel Processing

- Modern workloads like AI computations and high-performance gaming require multiple CPU cores working simultaneously.

- Microarchitectures now integrate multi-core and chiplet designs, which allow CPUs to scale efficiently for high-performance computing.

- Example: AMD’s chiplet-based design (Zen 2 and later) improves scalability and manufacturing efficiency compared to traditional monolithic CPUs.

Security & Data Protection

- Vulnerabilities such as Spectre and Meltdown, along with other side-channel attacks, have exposed flaws in how older microarchitectures handle speculative execution.

- Newer designs incorporate hardware-based security measures to prevent data leaks and unauthorized access.

- Example: Intel’s Control-Flow Enforcement Technology (CET) and AMD’s Shadow Stack Protection enhance CPU security.

Adaptability to AI & Machine Learning

- AI workloads require specialized processing capabilities beyond traditional CPUs.

- Modern microarchitectures now integrate dedicated AI acceleration units and vector processing extensions to improve AI performance.

- Example: Apple’s Neural Engine and Intel’s AI Boost enhance machine learning workloads directly at the chip level.

As computing demands grow, CPU microarchitecture continues to evolve to meet the challenges of performance, efficiency, and security. This post explores the latest trends, emerging technologies, and how major manufacturers like Intel, AMD, and ARM are shaping the future of processor design.

A Brief History: From Single-Core to Multi-Core Designs

The Era of Single-Core Processors (1970s–Early 2000s)

The earliest CPUs were designed to execute instructions sequentially, which means they could handle only one task at a time. These single-core processors relied on increasing clock speeds (measured in MHz or GHz) to improve performance: the higher the clock speed, the faster the CPU could process instructions.

However, this approach ran into physical and technological limits, which led to the development of multi-core architectures.

Key Milestones in Single-Core Processor Development

| Year | Processor | Key Features & Innovations |
| --- | --- | --- |
| 1971 | Intel 4004 | First commercial microprocessor (4-bit, 740 kHz), used in calculators. |
| 1978 | Intel 8086 | Introduced the x86 instruction set, which formed the foundation for modern PC processors. |
| 1985 | Intel 80386 | First 32-bit x86 processor, which allowed advanced multitasking in operating systems. |
| 1993 | Intel Pentium | Brought superscalar execution to x86, allowing multiple instructions per cycle. |
| 1999 | AMD Athlon | Launched in 1999 and became the first consumer x86 processor to reach 1 GHz in 2000, setting a speed milestone. |

Challenges of Single-Core CPUs

By the early 2000s, simply increasing clock speed became inefficient and unsustainable due to:

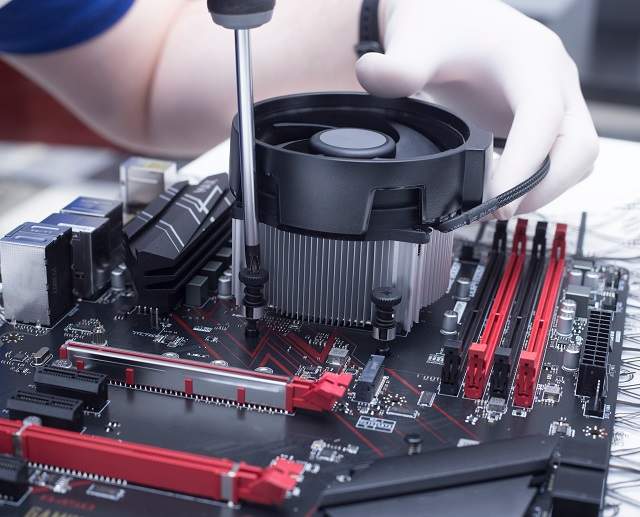

- Heat Dissipation – The faster a CPU runs, the more heat it generates, which requires increasingly complex cooling solutions.

- Power Consumption – Higher clock speeds increase energy use, which reduces battery life in mobile devices.

- Diminishing Performance Gains – With the end of Dennard scaling, smaller transistors no longer kept power density constant, so clock speeds could not keep rising without a disproportionate increase in power and heat.
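The heat and power problems above follow from the standard approximation for dynamic (switching) power, P ≈ a · C · V² · f. The sketch below applies that formula to show why chasing clock speed gets expensive once voltage has to rise with frequency. The capacitance, voltages, and frequencies are hypothetical values chosen for illustration, not measurements of any real chip.

```python
def dynamic_power(capacitance_f: float, voltage_v: float, frequency_hz: float,
                  activity: float = 1.0) -> float:
    """Approximate dynamic (switching) power in watts: P = a * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical effective switched capacitance (farads); an assumption for illustration.
CAPACITANCE = 1e-8

# Baseline: 3 GHz at 1.0 V. Faster part: 4 GHz, assumed to need 1.2 V to stay stable.
BASE_POWER = dynamic_power(CAPACITANCE, 1.0, 3.0e9)
FAST_POWER = dynamic_power(CAPACITANCE, 1.2, 4.0e9)

if __name__ == "__main__":
    print(f"baseline: {BASE_POWER:.1f} W, faster: {FAST_POWER:.1f} W")
    print(f"clock gain: {4.0 / 3.0:.2f}x, power cost: {FAST_POWER / BASE_POWER:.2f}x")
```

Under these assumptions a 33% clock increase costs roughly 92% more dynamic power, because power scales with the square of voltage as well as with frequency. Leakage power, which this sketch ignores, makes the picture worse.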

The “Power Wall” & The Need for Multi-Core Designs

- The industry hit the “Power Wall”, the point at which increasing performance through clock speed alone became impractical.

- Instead of building a faster single-core CPU, engineers divided workloads across multiple cores, which gave rise to multi-core architectures.

The Shift to Multi-Core Processors (Mid-2000s – Present)

Instead of relying on a single powerful core, multi-core processors feature multiple processing units that execute instructions in parallel.

Advantages of Multi-Core CPUs:

- Better Multitasking – Multiple cores can handle different tasks simultaneously, which reduces system slowdowns.

- Improved Performance Scaling – Multi-core designs efficiently handle multi-threaded applications like video editing, gaming, and data processing.

- Lower Power Consumption – Instead of raising clock speed, work is spread across cores that can run at lower frequency and voltage, which reduces the energy needed for the same throughput.
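How much a multi-threaded application actually gains from extra cores depends on how much of its work can run in parallel. Amdahl's law, a standard model that this post does not otherwise cover, captures that limit. The sketch below is a minimal illustration; the 90% parallel fraction is a hypothetical value.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of a workload can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workload in which 90% of the work parallelizes.
    for cores in (1, 2, 4, 8, 16):
        print(f"{cores:>2} cores -> {amdahl_speedup(0.9, cores):.2f}x")
```

The serial portion sets a ceiling: with 10% serial work the speedup can never exceed 10x, no matter how many cores are added. This is one reason core counts alone do not predict performance.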

Key Milestones in Multi-Core CPU Development

| Year | Processor | Innovation |
| --- | --- | --- |
| 2001 | IBM Power4 | First dual-core processor for servers. |
| 2005 | Intel Pentium D | Among the first consumer dual-core processors, marking the shift away from single-core CPUs. |
| 2005 | AMD Athlon 64 X2 | Competed with Intel by introducing dual-core desktop CPUs with better power efficiency. |
| 2007 | Intel Core 2 Quad | Among the first quad-core desktop processors, improving performance for multi-threaded applications. |
| 2011 | Intel Sandy Bridge & AMD Bulldozer | Offered 6-core and 8-core consumer CPUs. |
| 2017 | AMD Ryzen | Brought 8-core processors to mainstream desktops, with 16-core parts following in the high-end Threadripper line, boosting gaming and productivity. |
| 2020–2021 | Apple M1 & Intel Alder Lake | Heterogeneous multi-core design combining high-performance and efficient cores. |

The Role of Hyper-Threading & Simultaneous Multithreading (SMT)

To further improve efficiency, CPU manufacturers introduced Simultaneous Multithreading (SMT), which Intel markets as Hyper-Threading (HT).

- SMT allows each physical core to run two or more threads at once. With two threads per core, it doubles the number of logical cores the operating system sees, although the real-world gain is smaller than a doubling because the threads share the core’s execution resources.

- Example: A 4-core processor with Hyper-Threading can run 8 threads simultaneously, which improves multitasking performance.
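The thread-count arithmetic in the example above can be written as a short sketch. The helper `logical_cpus` is a hypothetical name introduced here for illustration. `os.cpu_count()` is the standard-library call that reports how many logical CPUs the operating system exposes, and it may return `None` on some platforms.

```python
import os


def logical_cpus(physical_cores: int, threads_per_core: int = 2) -> int:
    """Logical CPUs the operating system sees when SMT is enabled."""
    return physical_cores * threads_per_core


if __name__ == "__main__":
    print(logical_cpus(4))  # the 4-core example above, two threads per core -> 8
    print(os.cpu_count())   # logical CPUs visible on this machine (may be None)
```

Logical CPUs are not equivalent to physical cores: two threads on one core compete for the same execution units and caches.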

The Rise of Heterogeneous Multi-Core Architectures

With growing demands from AI, gaming, and mobile computing, modern CPUs are moving beyond homogeneous multi-core designs (where all cores are identical) to heterogeneous architectures, in which different types of cores specialize in different tasks.

Key Heterogeneous Multi-Core Designs

ARM’s big.LITTLE Architecture (2011)

- Used in smartphones and tablets to balance power efficiency and performance.

- Combines high-performance cores (for intensive tasks) with power-efficient cores (for background processes).

- Example: ARM Cortex-A77 + Cortex-A55 in mobile processors.

Intel Hybrid Architecture (Alder Lake, 2021)

- Uses Performance Cores (P-Cores) for intensive workloads (gaming, rendering).

- Uses Efficiency Cores (E-Cores) for background tasks, which reduces power consumption.

- First introduced in Intel 12th Gen Alder Lake CPUs.

AMD Chiplet-Based Multi-Core Architecture (2017–Present)

- Instead of a monolithic CPU die, AMD divides cores into multiple chiplets for better scalability.

- Example: AMD Ryzen 9 7950X uses two 8-core chiplets, for 16 cores in total, to handle high-performance workloads.

The Future: Chiplet-Based and AI-Driven Architectures

Upcoming Trends in Multi-Core CPU Design:

Chiplet-Based Architectures – Instead of making monolithic processors, companies like AMD and Intel are using multiple chiplets to improve scalability and manufacturing efficiency.

- Example: AMD’s Zen 4 and Zen 5 architectures utilize chiplet-based design for high-core-count processors.

AI-Optimized CPUs – Modern CPUs are integrating AI acceleration engines to optimize performance dynamically.

- Example: Apple M-series chips and Intel AI Boost use on-chip neural engines for AI workloads.

Quantum & Neuromorphic Computing – Research is exploring quantum processors and neuromorphic computing for the next generation of computing power.

The Evolution of CPU Microarchitecture

- From single-core to multi-core processors, CPU microarchitecture has evolved to meet the increasing demands of modern computing.

- Multi-core and heterogeneous architectures have unlocked large performance improvements, enabling better multitasking, power efficiency, and scalability.

- The future of CPU design will continue to focus on chiplet-based architectures, AI-driven optimizations, and specialized computing cores that enhance performance for next-generation applications.

As technology advances, we can expect more innovation in CPU microarchitecture, shaping the future of cloud computing, gaming, AI, and data centers.

Key Milestones in CPU Microarchitecture Development

The evolution of CPU microarchitecture has been shaped by breakthroughs in transistor technology, instruction set improvements and multi-core processing. This section highlights major milestones that have defined modern CPU design.

The Birth of the Microprocessor (1971–1980s)

Intel 4004 (1971) – The First Microprocessor

- 4-bit processor, 740 kHz clock speed, 2,300 transistors

- Designed for calculators, it marked the beginning of microprocessor development.

Intel 8086 (1978) – Birth of the x86 Architecture

- Intel’s 16-bit processor that introduced the x86 instruction set, which remains the foundation of modern PC and server CPUs.

- Its 8088 variant powered the original IBM PC, making x86 the dominant architecture for personal computing.

Motorola 68000 (1979) – A Game-Changer for Workstations

- Combined a 32-bit internal architecture with a 16-bit external data bus.

- Powered early Macintosh computers, the Amiga, and game consoles such as the Sega Genesis.

The Rise of Performance-Enhancing Techniques (1980s–1990s)

Intel 80386 (1985) – First 32-bit x86 Processor

- Extended protected mode to 32 bits, which enabled modern multitasking operating systems.

- Added paging-based virtual memory support, which let operating systems manage memory more efficiently.

RISC vs. CISC Debate (1980s–1990s)

- Reduced Instruction Set Computing (RISC) (ARM, MIPS, and PowerPC) simplified instructions for higher efficiency.

- Complex Instruction Set Computing (CISC) (x86) optimized complex operations in fewer instructions.

- RISC became dominant in mobile and embedded systems, while CISC (x86) remained prevalent in desktop and server CPUs.

Intel Pentium (1993) – Introduction of Superscalar Execution

- Superscalar architecture allowed multiple instructions per clock cycle.

- Marked a shift towards parallelism in CPU microarchitecture.
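To illustrate what issuing multiple instructions per cycle means in practice, here is a toy in-order issue model. It is a deliberately simplified sketch, not a model of the Pentium: it assumes every instruction completes in one cycle, and that an instruction cannot issue in the same cycle as an earlier instruction whose result it reads or overwrites. The tiny program and register names are hypothetical.

```python
from typing import List, Tuple

Instr = Tuple[str, Tuple[str, ...]]  # (destination register, source registers)

# Hypothetical six-instruction program: c needs a and b, d needs c, e and f are independent.
PROGRAM: List[Instr] = [
    ("a", ()),
    ("b", ()),
    ("c", ("a", "b")),
    ("d", ("c",)),
    ("e", ()),
    ("f", ()),
]


def cycles_in_order(program: List[Instr], width: int) -> int:
    """Count cycles for an in-order core that issues up to `width` instructions per cycle."""
    if width < 1:
        raise ValueError("width must be at least 1")
    cycles = 0
    i = 0
    while i < len(program):
        written = set()  # registers produced earlier in this same cycle
        issued = 0
        while i < len(program) and issued < width:
            dest, srcs = program[i]
            # Stop issuing this cycle if the instruction depends on a same-cycle result.
            if dest in written or written.intersection(srcs):
                break
            written.add(dest)
            issued += 1
            i += 1
        cycles += 1
    return cycles


if __name__ == "__main__":
    print("scalar (1-wide):", cycles_in_order(PROGRAM, 1), "cycles")
    print("superscalar (2-wide):", cycles_in_order(PROGRAM, 2), "cycles")
```

The 2-wide core finishes in 4 cycles instead of 6 rather than the ideal 3, because the dependency between `c` and `d` prevents them from issuing together. Out-of-order execution, mentioned earlier in this post, exists largely to fill such gaps.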

AMD K6-2 (1998) – 3DNow! Technology

- Added floating-point SIMD (Single Instruction, Multiple Data) instructions to x86 to accelerate 3D graphics and multimedia applications.

The Multi-Core Revolution & Hyper-Threading (2000s–2010s)

Intel Pentium 4 (2000) – The GHz Race and Hyper-Threading

- Eventually reached a 3.8 GHz clock speed, but suffered from high power consumption and heat output.

- Later models added Hyper-Threading (HT), which improves efficiency by allowing one core to handle two threads.

AMD Athlon 64 (2003) – First Consumer 64-bit Processor

- Brought 64-bit computing to x86 desktops, which paved the way for modern 64-bit operating systems and software.

Intel Core 2 Duo (2006) – The Shift to Multi-Core Processors

- First dual-core processor to gain widespread adoption.

- Prioritized power efficiency over high clock speeds, marking the end of the “GHz race.”

AMD Phenom II X4 (2008) – Consumer Quad-Core CPUs

- Marked the mainstream adoption of quad-core processing.

Intel Nehalem (2008) – Integrated Memory Controller & Turbo Boost

- Moved the memory controller onto the CPU itself, which reduced memory latency.

- Introduced Turbo Boost, which dynamically raises clock speeds when power and thermal headroom allow.

Heterogeneous & Chiplet Architectures (2010s–Present)

Intel Sandy Bridge (2011) – On-Die Graphics & AVX Instructions

- Integrated the GPU on the same die as the CPU, which reduces the need for discrete graphics in low-power systems.

- Introduced AVX (Advanced Vector Extensions) for high-performance computing.

AMD Ryzen (2017) – Zen Architecture and the Move to Chiplets

- Introduced the Zen architecture with up to 8 cores on mainstream desktops, challenging Intel’s dominance.

- Moved to a chiplet-based design with Zen 2 (Ryzen 3000, 2019), which improves scalability and reduces manufacturing costs.

Intel Alder Lake (2021) – Hybrid CPU Architecture

- Combined Performance Cores (P-Cores) and Efficiency Cores (E-Cores) to balance performance and power efficiency.

Apple M1 (2020) & M2 (2022) – ARM-Based Revolution

- Shifted Macs from Intel x86 processors to Apple-designed ARM-based chips, which offer high performance with low power consumption.

- Unified Memory Architecture (UMA) lets the CPU and GPU share a single memory pool, which improves efficiency in AI and GPU-intensive tasks.

AMD Zen 4 (2022) – 5nm Process & 3D V-Cache

- Offered X3D models with 3D-stacked L3 cache (3D V-Cache, first introduced on Zen 3), which improves gaming performance.
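A larger cache helps because fewer accesses fall through to slow main memory. The standard average memory access time (AMAT) formula makes this concrete. The sketch below is illustrative only: the latencies and miss rates are hypothetical, not AMD measurements, and it ignores any extra latency a stacked cache might add.

```python
def amat_ns(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time: hit time plus the expected cost of misses."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical numbers: 10 ns L3 hit, 80 ns extra to reach DRAM on a miss.
SMALL_CACHE_AMAT = amat_ns(10.0, 0.30, 80.0)  # assumed 30% of L3 accesses miss
LARGE_CACHE_AMAT = amat_ns(10.0, 0.15, 80.0)  # assumed larger cache halves the miss rate

if __name__ == "__main__":
    print(f"smaller L3: {SMALL_CACHE_AMAT:.1f} ns per access")
    print(f"larger L3:  {LARGE_CACHE_AMAT:.1f} ns per access")
```

Games tend to have large, irregular working sets, which is why they are often cited as benefiting from extra L3 capacity. Workloads that already fit in a smaller cache see little change.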

The Future of CPU Microarchitecture

Chiplet-Based and AI-Optimized CPUs

- CPUs will increasingly rely on chiplet architectures to scale core counts efficiently.

- AI-driven microarchitecture designs will optimize performance for machine learning and edge computing.

Quantum & Neuromorphic Computing

- Research is advancing in quantum processors and brain-inspired computing models for next-gen AI applications.

A Continuous Evolution

The journey of CPU microarchitecture reflects the industry’s drive for higher efficiency, parallelism, and power optimization. From the Intel 4004 to modern chiplet-based, AI-enhanced architectures, CPUs continue to evolve to meet the demands of gaming, AI, cloud computing, and high-performance workloads.

The future promises even more groundbreaking advancements in microprocessor design!

Chiplet-Based Architecture: Breaking the Monolithic Design

The Shift from Monolithic CPUs to Chiplets

Traditionally, CPUs followed a monolithic design, in which all processing cores, cache, and controllers were integrated into a single silicon die. However, as manufacturing complexity and costs increased with smaller process nodes (5nm, 3nm), monolithic designs became less scalable and more expensive to produce.

To overcome these challenges, chiplet-based architecture emerged as a new approach to CPU design. Instead of relying on a single large die, chiplet designs divide processor components into smaller, modular units connected via high-speed interconnects.

Key Advantages of Chiplet-Based Architecture

Scalability:

- Chiplets allow manufacturers to mix and match different core configurations, enabling custom solutions for high-performance computing, AI, and data centers.

- Core counts are easier to scale without the yield issues associated with large monolithic dies.

Cost Efficiency:

- Smaller chiplets improve manufacturing yield, which reduces waste and production costs compared to a single large die.

- Chiplets enable reuse of existing silicon designs across products, which lowers development expenses.
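The yield argument can be sketched with the simple Poisson yield model, Y = exp(-A · D), where A is die area and D is defect density. This is a common first-order approximation rather than any foundry's actual model. The die sizes and defect density below are hypothetical, and the sketch ignores packaging cost and the area chiplets spend on interconnect.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to be defect-free under the Poisson yield model."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_cpu(die_area_mm2: float, dies_per_cpu: int,
                         defects_per_cm2: float) -> float:
    """Average silicon area (mm^2) that must be fabricated per working CPU."""
    return dies_per_cpu * die_area_mm2 / poisson_yield(die_area_mm2, defects_per_cm2)


DEFECT_DENSITY = 0.1  # hypothetical defects per cm^2

# One 600 mm^2 monolithic die versus four 150 mm^2 chiplets with the same total area.
MONOLITHIC = silicon_per_good_cpu(600.0, 1, DEFECT_DENSITY)
CHIPLETS = silicon_per_good_cpu(150.0, 4, DEFECT_DENSITY)

if __name__ == "__main__":
    print(f"monolithic yield: {poisson_yield(600.0, DEFECT_DENSITY):.1%}")
    print(f"chiplet yield:    {poisson_yield(150.0, DEFECT_DENSITY):.1%}")
    print(f"silicon per good CPU: {MONOLITHIC:.0f} mm^2 vs {CHIPLETS:.0f} mm^2")
```

A defect on a large die scraps the whole die, while a defect on a chiplet scraps only a quarter of the silicon in this example. Only chiplets that pass testing are assembled into a package, which is where the saving comes from.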

Performance Optimization:

- Different chiplets can be optimized for specific roles, such as high-performance cores, low-power efficiency cores, or dedicated AI accelerators.

- Advanced interconnect technologies (Infinity Fabric, EMIB, Foveros) ensure high-bandwidth, low-latency communication between chiplets.

Power Efficiency:

- Smaller dies can reduce leakage and spread heat across the package, which can improve overall energy efficiency.

- This is particularly beneficial for data centers, high-performance computing (HPC), and mobile devices.

Pioneers of Chiplet-Based CPU Design

AMD Ryzen & EPYC (2017–Present)

- AMD’s Zen 2 architecture (Ryzen 3000) was among the first to bring chiplet-based CPUs to mainstream users.

- Infinity Fabric connects the CPU chiplets and provides high-speed communication between them.

- EPYC processors leveraged multi-chip modules (MCM) to scale up to 96 cores (Zen 4).

Intel Alder Lake & Sapphire Rapids (2021–Present)

- Alder Lake introduced Intel’s hybrid approach, which combines Performance (P) and Efficiency (E) cores on a single die to optimize workloads.

- Foveros 3D stacking technology lets Intel stack dies vertically within one package.

- Sapphire Rapids Xeon CPUs use a modular tile-based approach for data center workloads.

Apple M1 & M2 (2020–Present)

- Apple’s ARM-based SoCs integrate CPU, GPU, and memory controllers into a unified architecture, which improves power efficiency and performance.

- While not traditional chiplets, Apple’s approach to modular silicon mirrors the benefits of chiplet integration.

NVIDIA & Custom AI Processors

- NVIDIA is exploring chiplet-based GPUs and AI accelerators to optimize deep learning performance.

- Future AI-focused chips will integrate chiplets dedicated to tensor processing, inference, and real-time data analytics.

Challenges of Chiplet-Based Architecture

Interconnect Bottlenecks

- Efficient high-speed communication between chiplets requires advanced interconnect technologies such as AMD’s Infinity Fabric and Intel’s EMIB.

- Latency issues can arise if the inter-chip bandwidth is not optimized.

Software & Workload Optimization

- Software and OS schedulers must effectively manage workloads across different chiplets to prevent bottlenecks.

- Workload distribution must be fine-tuned for hybrid architectures like Intel’s Alder Lake (P-Cores & E-Cores).

Manufacturing Complexity

- Chiplets improve yield and cost. However, integrating multiple dies into a single package requires precise engineering and advanced packaging techniques.

The Future of Chiplet-Based CPUs

3D Chiplet Stacking & Advanced Packaging

- 3D-stacked chiplets will further improve bandwidth and power efficiency (AMD’s 3D V-Cache).

- Hybrid bonding will allow denser connections between different processing units.

AI & Heterogeneous Computing

- Chiplets dedicated to AI acceleration, encryption, and real-time processing will become more prevalent.

- Future designs may incorporate ARM cores, RISC-V accelerators, and specialized AI engines within a chiplet-based CPU.

Mainstream Adoption in Consumer Devices

- Currently, chiplet architectures are dominant in high-performance computing (HPC) and data centers.

- Future desktop and mobile CPUs are expected to move further toward modular chiplet designs, with the aim of improving battery life and performance.

A Paradigm Shift in CPU Design

Chiplet-based architecture represents a fundamental shift in how modern processors are designed and manufactured. By breaking away from monolithic constraints, chiplets offer scalability, cost-effectiveness, and power efficiency, and they pave the way for next-generation computing in AI, gaming, cloud, and mobile devices.

The future of CPU microarchitecture is chiplet-driven, and it opens up new possibilities for innovation!

Power Efficiency & Performance Per Watt Improvements

The Growing Demand for Energy-Efficient Computing

With increasing computational demands, the focus has shifted from raw performance to efficiency: users now want the best performance per watt. Whether it is high-performance computing (HPC), gaming, AI workloads, or mobile devices, optimizing power usage has become a crucial aspect of CPU microarchitecture.

Modern CPU architectures now combine smaller transistors, hybrid cores, AI-assisted power management, and advanced packaging techniques to deliver higher performance at lower energy cost.

Key Innovations & Industry Trends in Energy-Efficient CPU Design

1️⃣ Advanced Process Nodes: The Foundation of Power Efficiency

Moving to smaller nanometer (nm) process nodes has allowed manufacturers to pack more transistors while reducing power consumption.

Industry Trends & Notable CPUs

- Intel Core Ultra 9 (Meteor Lake, Intel 4 Process) – First Intel client processor with a disaggregated, tile-based architecture. It adds low-power efficiency cores for background tasks.

- AMD Ryzen 9 7950X3D (TSMC 5nm + 3D V-Cache) – Uses stacked L3 cache to improve performance while lowering power draw.

- Apple M3 Pro & M3 Max (TSMC 3nm) – Among the first consumer chips built on 3nm technology, significantly improving performance per watt.

Impact:

- More power-efficient CPUs mean less heat generation and lower cooling requirements.

- Laptops, smartphones, and cloud servers benefit from extended battery life and reduced power costs.

2️⃣ big.LITTLE & Hybrid Core Architectures: Smarter Power Management

Instead of using only high-performance cores, CPU makers are integrating performance cores (P-cores) and efficiency cores (E-cores) to optimize workload distribution.

Industry Trends & Notable CPUs

- Intel Alder Lake & Raptor Lake (12th, 13th Gen) – First mainstream x86 chips to combine P-cores and E-cores, which improved power efficiency.

- ARM Cortex-X4 + Cortex-A720 + Cortex-A520 (Snapdragon & Exynos Mobile Chips) – Use hybrid cores to extend smartphone battery life.

- Apple M2 & M3 Chips – ARM-based chips with low-power efficiency cores, delivering 20+ hours of battery life in laptops.

Impact:

- Laptops and smartphones can now run background tasks on low-power cores, which reduces unnecessary energy usage.

- Data centers are adopting power-efficient ARM-based processors to cut operational costs.

3️⃣ Dynamic Voltage & Frequency Scaling (DVFS) for Smart Energy Use

Modern CPUs use dynamic power scaling to adjust clock speeds and voltages based on workload intensity.
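As a rough illustration of the idea, the sketch below picks the lowest operating point that can still absorb the current load. It is a toy policy written for this post, not the algorithm used by any real operating system governor or CPU firmware. The table of frequency and voltage pairs, the function name, and the 80% headroom target are all hypothetical.

```python
from typing import List, Tuple

# Hypothetical operating points as (frequency in GHz, voltage in V), lowest first.
OPERATING_POINTS: List[Tuple[float, float]] = [
    (0.8, 0.70),
    (1.6, 0.80),
    (2.4, 0.95),
    (3.2, 1.10),
]


def pick_operating_point(utilization: float, current_ghz: float,
                         headroom: float = 0.8) -> Tuple[float, float]:
    """Return the lowest (GHz, V) point that keeps projected utilization under `headroom`."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    demand_ghz = utilization * current_ghz  # work actually being done, in GHz-equivalents
    for freq_ghz, voltage in OPERATING_POINTS:
        if demand_ghz <= freq_ghz * headroom:
            return freq_ghz, voltage
    return OPERATING_POINTS[-1]  # saturated: run at the highest point available


if __name__ == "__main__":
    for load in (0.10, 0.50, 0.95):
        freq, volt = pick_operating_point(load, current_ghz=3.2)
        print(f"load {load:.0%} at 3.2 GHz -> run at {freq} GHz, {volt} V")
```

Because dynamic power depends on the square of voltage, dropping to a lower operating point during light load saves far more power than the frequency reduction alone would suggest.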

Industry Trends & Notable CPUs

- AMD Precision Boost 2 & Intel Turbo Boost Max 3.0 – Automatically raise CPU clock speeds when needed and lower them at idle to reduce power draw.

- ARM DynamIQ Technology – Supports finer-grained voltage and frequency control for mobile processors, which helps performance scale efficiently.

- Apple’s Neural Engine Power Management – Uses AI to distribute power across CPU, GPU, and machine learning workloads dynamically.

Impact:

- AI-driven power scaling reduces energy waste, which enables cooler laptops and servers.

- Gamers get high performance when needed, while idle power usage remains minimal.

4️⃣ 3D Stacking & Advanced Packaging: Power Efficiency at the Next Level

New CPU packaging techniques increase interconnect bandwidth while keeping power draw low.

Industry Trends & Notable CPUs

- AMD 3D V-Cache (Ryzen 7800X3D, 7950X3D) – Stacks extra L3 cache on top of the CPU to reduce memory access power consumption.

- Intel Foveros 3D Stacking (Meteor Lake) – Uses a tile-based architecture that allows different components to be optimized separately for power efficiency.

- TSMC CoWoS (Chip-on-Wafer-on-Substrate) – Advanced packaging interconnects improve power efficiency in AI & HPC processors like NVIDIA Grace Hopper.

Impact:

- Gaming CPUs now consume less power while maintaining high frame rates.

- Data centers can run workloads with lower power requirements, which reduces operational costs.

5️⃣ AI-Assisted Power Optimization: Smarter Energy Management

AI and machine learning are now used to predict and adjust power usage dynamically based on workload behavior.

Industry Trends & Notable CPUs

- Intel’s AI-assisted Power Tuning (Meteor Lake) – Uses AI models to optimize performance per watt in real-time.

- NVIDIA Hopper AI Accelerators – Feature AI-driven power scaling for deep learning workloads to maximize efficiency.

- Apple M3 Neural Engine – Automatically distributes power across CPU, GPU, and AI tasks, which extends battery life.

Impact:

- AI-powered power tuning improves real-time workload efficiency, which makes AI and ML applications more energy-efficient.

- Laptops and mobile devices last longer on a single charge, while data centers reduce their carbon footprints.

How Power Efficiency is Reshaping Computing

Sustainable Data Centers & HPC

- Companies like Google, AWS, and Microsoft are adopting energy-efficient ARM-based processors to cut electricity costs.

- AI-based cooling systems improve efficiency, with reported reductions in data center cooling energy of up to 40%.

Longer Battery Life in Consumer Devices

- ARM-based chips (Apple M-series, Qualcomm Snapdragon X Elite) now offer 20+ hours of battery life in laptops.

- Efficient GPU-accelerated AI processing ensures mobile devices run AI tasks without excessive battery drain.

AI & Edge Computing with Lower Power Requirements

- AI inference workloads are moving toward low-power neural processing units (NPUs).

- Efficient AI accelerators allow autonomous vehicles, IoT, and robotics to operate on battery-powered systems.

Real-World Use Cases of Power-Efficient CPUs

1. Data Centers: Reducing Power Consumption & Carbon Footprint

- Cloud providers like Google, AWS, and Microsoft are shifting to ARM-based chips (Graviton, Ampere Altra) to cut power usage, with claimed savings of up to 40% compared to x86 CPUs.

- AI-based cooling reduces energy waste, which lowers operational costs and environmental impact.

2. Laptops & Mobile Devices: Maximizing Battery Life

- Apple’s M-series chips (M2, M3) deliver 20+ hours of battery life, thanks to low-power efficiency cores and optimized memory management.

- Qualcomm Snapdragon X Elite brings AI-driven power scaling to Windows laptops, balancing performance and battery life.

3. Gaming & Workstations: High Performance, Low Power

- AMD’s Ryzen 7 7800X3D with 3D V-Cache runs at lower voltage while maintaining top-tier gaming performance.

- Intel’s 14th Gen chips use efficiency cores to manage background processes, which frees up power for demanding tasks.

4. AI, IoT, and Automotive Applications

- Tesla’s Full Self-Driving (FSD) chip optimizes power usage for real-time AI inference in cars.

- NVIDIA Jetson Orin enables edge AI processing at low power, which suits drones, robotics, and smart cameras.

Power Efficiency Comparison of Modern CPUs (Performance Per Watt)

| Processor | Process Node | Power Efficiency Features | TDP | Performance Per Watt Improvement |
| --- | --- | --- | --- | --- |
| Apple M3 Max | TSMC 3nm | Hybrid cores, AI power tuning | 36W | +40% vs M2 Max |
| Intel Core Ultra 9 (Meteor Lake) | Intel 4 | Disaggregated chiplets, AI tuning | 45W | +25% vs Raptor Lake |
| AMD Ryzen 9 7950X3D | TSMC 5nm | 3D V-Cache, optimized power scaling | 120W | +30% vs 7950X |
| NVIDIA Grace Hopper | TSMC 4nm | AI-driven power scaling, CoWoS | 300W | +40% vs Ampere |
| Qualcomm Snapdragon X Elite | TSMC 4nm | ARM-based, AI power management | 15W | +45% vs Intel x86 mobile chips |
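For readers who want to reproduce this kind of comparison, the sketch below shows how a performance-per-watt improvement is typically calculated. The benchmark scores and power figures are hypothetical and are not the data behind the table above. Note also that TDP is a design target and can differ from measured power draw.

```python
def perf_per_watt(benchmark_score: float, watts: float) -> float:
    """Benchmark points delivered per watt of power."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return benchmark_score / watts


def improvement_pct(new_value: float, old_value: float) -> float:
    """Percentage improvement of new_value over old_value."""
    return (new_value / old_value - 1.0) * 100.0


# Hypothetical chips: the older one scores 1000 points at 50 W, the newer 1200 at 45 W.
OLD_CHIP = perf_per_watt(1000.0, 50.0)
NEW_CHIP = perf_per_watt(1200.0, 45.0)

if __name__ == "__main__":
    print(f"old: {OLD_CHIP:.1f} pts/W, new: {NEW_CHIP:.1f} pts/W")
    print(f"performance per watt improvement: {improvement_pct(NEW_CHIP, OLD_CHIP):+.1f}%")
```

In this example a 20% higher score combined with 10% lower power works out to roughly a 33% gain in performance per watt, which shows how modest changes on both axes compound.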

Key Takeaways:

- Apple & Qualcomm dominate ultra-efficient computing with ARM-based low-power architectures.

- AMD’s 3D V-Cache improves gaming power efficiency without increasing wattage.

- Intel & NVIDIA leverage AI-driven power management for better efficiency in AI & HPC workloads.

The Future of Performance Per Watt

Power-efficient computing is no longer just about battery life; it now defines the next generation of CPUs. With hybrid cores, AI-based tuning, and energy-efficient process nodes, the industry is moving toward sustainable, high-performance computing.

Conclusion: Performance Per Watt is the Future of Computing

The evolution of CPU microarchitecture is now focused on efficiency-first design. As transistor scaling approaches physical limits, manufacturers are innovating through hybrid cores, 3D stacking, AI-driven power management, and energy-efficient process nodes.

Intel, AMD, Apple, ARM, and NVIDIA are all prioritizing power-efficient designs to enable longer-lasting devices, sustainable data centers, and AI-driven workloads.

In the future, CPUs will not just be measured by speed but by how efficiently they use every watt of power.

Hybrid Core Architecture (big.LITTLE, Intel P-Cores & E-Cores)

The Shift toward Heterogeneous Architectures

Traditional CPU designs relied on homogeneous cores, where all cores had the same performance and power characteristics. However, as computing demands increased, a more energy-efficient and workload-optimized approach became necessary. In hybrid core architecture, different types of cores handle different tasks to maximize performance while minimizing power consumption.

Hybrid architectures like ARM’s big.LITTLE and Intel’s Performance (P) and Efficiency (E) cores are now common in modern CPUs, balancing high-performance workloads with power efficiency.

How Hybrid Core Architecture Works

Big Cores (Performance-Oriented)

- Designed for high-performance tasks like gaming, video editing, and AI workloads.

- Consume more power but deliver higher single-thread performance.

- Found in Intel’s P-Cores (Performance Cores) and ARM’s big cores.

Little Cores (Power-Efficient, Background Tasks)

- Handle lightweight tasks like background updates, browsing, and system maintenance.

- Consume less power, which allows for longer battery life in mobile devices.

- Found in Intel’s E-Cores (Efficiency Cores) and ARM’s LITTLE cores.

Smart Scheduling: Modern CPUs work with the operating system scheduler to allocate workloads dynamically, so that the right core handles the right task at the right time.
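The basic placement decision can be sketched with a toy greedy policy. This is not how Intel Thread Director or any real OS scheduler works: the `demand` score, the 0.5 threshold, and the names `Task` and `assign_cores` are hypothetical, and real schedulers also migrate tasks over time based on live measurements.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Task:
    name: str
    demand: float  # hypothetical score from 0.0 (light) to 1.0 (heavy)


def assign_cores(tasks: List[Task], p_cores: int, e_cores: int,
                 threshold: float = 0.5) -> Dict[str, str]:
    """Greedy placement: heavy tasks prefer P-cores, light tasks prefer E-cores."""
    free = {"P": p_cores, "E": e_cores}
    placement: Dict[str, str] = {}
    # Place the heaviest tasks first so they get first pick of the P-cores.
    for task in sorted(tasks, key=lambda t: t.demand, reverse=True):
        preferred, fallback = ("P", "E") if task.demand >= threshold else ("E", "P")
        for kind in (preferred, fallback):
            if free[kind] > 0:
                free[kind] -= 1
                placement[task.name] = kind
                break
        else:
            placement[task.name] = "queued"  # no free core of either type
    return placement


if __name__ == "__main__":
    workload = [Task("game", 0.9), Task("render", 0.8),
                Task("email", 0.1), Task("update", 0.2)]
    print(assign_cores(workload, p_cores=2, e_cores=2))
```

With two cores of each type, the game and render tasks land on P-cores while email and updates run on E-cores. The fallback step matters in practice: when P-cores are busy, running a heavy task on an E-core is usually better than leaving it waiting.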

Intel’s Hybrid Core Design: P-Cores & E-Cores

Performance Cores (P-Cores)

Optimized for high clock speeds & single-threaded performance.

Ideal for gaming, rendering, and high-load applications.

Found in Intel’s Alder Lake, Raptor Lake, and Meteor Lake CPUs.

Efficiency Cores (E-Cores)

Consume less power, reducing overall heat output.

Designed for background tasks & multi-threaded workloads.

Improve multi-core efficiency without increasing power draw.

Intel Thread Director: A built-in hardware feature that monitors how each thread behaves and guides the operating system scheduler in assigning tasks to P-Cores and E-Cores for optimal performance.

Example:

- Gaming + Streaming: P-Cores handle the game. E-Cores manage streaming & background tasks.

- Video Rendering: E-Cores assist in multi-threaded workloads, speeding up render times while keeping heat output in check.
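
The core-assignment idea in the examples above can be sketched in a few lines of Python. This is only a toy heuristic, not how Intel Thread Director or any operating system scheduler is actually implemented; the `Task` fields, the `demand` score, and the `0.6` threshold are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    demand: float      # hypothetical 0..1 estimate of how compute-heavy the task is
    foreground: bool   # True when the user is actively waiting on the task


def pick_core_type(task: Task, threshold: float = 0.6) -> str:
    """Return "P" for foreground or demanding work, otherwise "E"."""
    if task.foreground or task.demand >= threshold:
        return "P"
    return "E"


def assign(tasks: list[Task]) -> dict[str, list[str]]:
    """Group task names by the core type the heuristic selects."""
    placement: dict[str, list[str]] = {"P": [], "E": []}
    for task in tasks:
        placement[pick_core_type(task)].append(task.name)
    return placement


tasks = [
    Task("game", demand=0.9, foreground=True),
    Task("stream-encoder", demand=0.5, foreground=False),
    Task("cloud-sync", demand=0.1, foreground=False),
]
print(assign(tasks))
```

In this sketch the game lands on a P-Core while the stream encoder and the sync job go to E-Cores, mirroring the Gaming + Streaming example. A real scheduler also weighs core availability, thermals, and thread priorities.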

ARM’s big.LITTLE & DynamIQ: Leading Mobile Efficiency

ARM pioneered the big.LITTLE architecture, which later evolved into DynamIQ and enables finer-grained control over workloads.

big Cores: High-performance tasks (gaming, AI, heavy multitasking).

LITTLE Cores: Background processing (emails, notifications, standby mode).

DynamIQ: Allows mixing different core types within a single cluster, which improves power efficiency further.

Example:

- Smartphones & Tablets (Apple, Qualcomm, Samsung) use ARM’s Cortex-X & Cortex-A cores to balance performance & battery life.

- Apple’s M-Series chips leverage hybrid core designs to outperform Intel in efficiency.

Real-World Benefits of Hybrid Core Architectures

1. Laptops & Mobile Devices: Longer Battery Life & Performance Gains

- Apple’s M3 Max (hybrid P/E cores) and Qualcomm’s Snapdragon X Elite are ARM-based chips that significantly improve power efficiency.

2. Gaming: Better Multitasking & Background Process Handling

- Intel’s 14th Gen Core CPUs use E-Cores for background processes, which helps keep game frame rates stable.

3. AI & Cloud Computing: Optimized Workload Distribution

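- NVIDIA’s Grace Hopper pairs an ARM-based Grace CPU with a Hopper GPU, and Google’s Tensor Processing Units (TPUs) serve as dedicated accelerators. These are heterogeneous systems rather than hybrid P/E-core CPUs, but they apply the same principle of matching each workload to the most efficient hardware for AI model training.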

The Future of Hybrid CPU Designs

Hybrid core architectures have redefined how processors handle workloads, blending high performance with energy efficiency. Whether in PCs, gaming consoles, smartphones, or AI workloads, heterogeneous computing is the future of CPU design.

Hybrid Core Performance Comparison (Intel vs. Apple vs. ARM)

| Processor | Architecture | P-Cores / E-Cores | TDP (Wattage) | Performance Per Watt | Key Strengths |

| Intel Core i9-14900K (14th Gen) | Hybrid (P/E Cores) | 8 P-Cores / 16 E-Cores | 125W (Base) / 253W (Turbo) | Good | High single-thread performance for gaming & productivity |

| Apple M3 Max | ARM big.LITTLE (Efficiency-first) | 12 P-Cores / 4 E-Cores | 36W (Max Load) | Excellent | Extreme efficiency, ideal for MacBooks & creative workloads |

| AMD Ryzen 9 7950X3D (3D V-Cache) | Traditional Multi-Core | 16 Performance Cores | 120W | Moderate | Best for gaming & cache-intensive tasks |

| Qualcomm Snapdragon X Elite | ARM (custom Oryon cores) | 12 Cores (no separate E-Cores) | 15W | Exceptional | Best battery life for Windows laptops, AI-optimized |

| Apple A17 Pro (iPhone 15 Pro) | ARM big.LITTLE | 2 P-Cores / 4 E-Cores | 5W (Est.) | Industry-Leading | Efficient mobile performance with low power draw |

Key Takeaways:

Apple’s ARM chips (M3, A17 Pro) lead in performance per watt with industry-best power efficiency.

Intel’s hybrid P/E core approach maximizes performance, but at the cost of higher power consumption.

AMD’s 3D V-Cache is great for gaming, but the chip lacks dedicated efficiency cores.

Qualcomm’s Snapdragon X Elite is the future for ultra-efficient Windows laptops.

AI-Driven Optimization in CPU Design

The Role of AI in Modern CPU Design

Computing demands are continuously rising, and traditional methods of improving CPU performance, like increasing clock speeds or adding more cores, are reaching their limits. This has led to the integration of artificial intelligence (AI) and machine learning (ML) into CPU design, enabling smarter performance tuning, power efficiency, and workload distribution.

AI-driven optimization is now a core element in CPU architecture, influencing everything from real-time task scheduling to predictive performance enhancements.

How AI is Revolutionizing CPU Performance

1️⃣ Intelligent Task Scheduling & Workload Optimization

Traditional Approach: CPU task scheduling was handled by static algorithms, which led to inefficiencies in power usage and performance.

AI-Driven Approach: AI-powered schedulers analyze real-time workloads and dynamically allocate tasks to optimize speed and energy consumption.

Example:

- Intel Thread Director (introduced with Alder Lake) uses AI-based hardware monitoring to help the operating system assign workloads between Performance (P) Cores and Efficiency (E) Cores, improving multitasking and power savings.

2️⃣ AI-Assisted Power Management for Efficiency Gains

Power consumption is one of the biggest challenges in CPU design. AI helps by predicting workloads and adjusting power consumption dynamically.

Example:

- Apple’s M-series chips use AI-powered Dynamic Voltage and Frequency Scaling (DVFS) to match voltage and clock speed to the workload, which maximizes battery life on MacBooks.

- AMD’s Ryzen AI enhances adaptive power tuning, which helps CPUs run at optimal performance per watt.
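
To see why scaling voltage and frequency together saves so much power, it helps to apply the standard approximation for dynamic CMOS power, P ≈ C × V² × f. The sketch below does that arithmetic. The capacitance value and both operating points are hypothetical numbers chosen for illustration, not measurements from any Apple or AMD chip.

```python
def dynamic_power(capacitance_f: float, voltage_v: float, frequency_hz: float) -> float:
    """Approximate dynamic power in watts: P = C * V^2 * f."""
    return capacitance_f * voltage_v ** 2 * frequency_hz


C_EFF = 1e-9  # hypothetical effective switched capacitance, in farads

high = dynamic_power(C_EFF, voltage_v=1.2, frequency_hz=4.0e9)  # boost state
low = dynamic_power(C_EFF, voltage_v=0.9, frequency_hz=3.0e9)   # power-saving state

print(f"high state: {high:.2f} W")
print(f"low state:  {low:.2f} W")
print(f"power ratio (low / high): {low / high:.2f}")
```

Because voltage enters the formula squared, dropping the clock by 25% while lowering the voltage from 1.2V to 0.9V cuts dynamic power by more than half in this example. That trade is what a DVFS governor exploits, whether it is driven by simple rules or by a learned model.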

3️⃣ AI in Predictive Performance Enhancement

CPUs now use machine learning models to predict upcoming workloads and adjust performance accordingly.

Example:

- ARM’s Cortex-X cores use advanced branch predictors that learn from past branch behavior, which reduces pipeline stalls in complex computing tasks.

- NVIDIA’s Grace CPU uses deep learning acceleration to optimize AI and cloud workloads.
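
Hardware prediction that "learns" from history is easiest to picture with the classic two-bit saturating counter, a textbook branch predictor. The sketch below is that textbook scheme, not the design used in Cortex-X or any other shipping core; the table size and the sample branch pattern are assumptions for illustration.

```python
class TwoBitPredictor:
    """One 2-bit saturating counter per table entry, indexed by branch address."""

    def __init__(self, entries: int = 16) -> None:
        self.entries = entries
        # Counter values 0-1 predict "not taken"; 2-3 predict "taken".
        self.counters = [1] * entries

    def predict(self, pc: int) -> bool:
        return self.counters[pc % self.entries] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = pc % self.entries
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def accuracy(outcomes: list[bool], pc: int = 0x40) -> float:
    """Fraction of outcomes predicted correctly for a single branch."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict(pc) == taken:
            correct += 1
        predictor.update(pc, taken)
    return correct / len(outcomes)


# A loop branch that is taken nine times and then falls through, repeated ten times.
loop_branch = ([True] * 9 + [False]) * 10
print(f"accuracy: {accuracy(loop_branch):.0%}")
```

The counter mispredicts only around each loop exit, which is why even this tiny state machine does well on loop-heavy code. Modern predictors track far more history, but the idea of adjusting a prediction from observed outcomes is the same.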

4️⃣ AI-Optimized Cache & Memory Management

AI plays a crucial role in managing cache memory efficiently, reducing bottlenecks in data retrieval.

Example:

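- AMD’s Smart Access Memory (SAM), which builds on PCIe Resizable BAR, lets the CPU address the GPU’s entire video memory instead of a small window. SAM can boost gaming and workstation performance, although it is a bus-level feature rather than an AI-driven technique.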

The Future of AI in CPU Microarchitecture

Fully AI-Optimized CPUs: Future chips may feature dedicated AI cores that learn and adapt to a user’s computing habits.

Neural Processing Units (NPUs): CPUs will increasingly integrate NPUs to accelerate AI tasks without relying on GPUs.

AI-Driven Security Features: AI will help detect and mitigate real-time cyber threats at the CPU level.

AI as the Future of CPU Design

AI-driven optimization is redefining CPU microarchitecture, leading to smarter, faster, and more power-efficient processors. As AI continues to evolve, we can expect greater automation, efficiency, and intelligent computing in everyday devices.

Advancements in Memory Hierarchy & Cache Management

The Importance of Memory Hierarchy in CPU Performance

Memory hierarchy plays a critical role in modern CPU microarchitecture. It ensures fast data access while maintaining power efficiency. With increasing computational demands, innovations in L1/L2/L3 cache management, DDR5, and High Bandwidth Memory (HBM) have drastically improved overall latency, bandwidth, and performance per watt.

1️⃣ Evolution of CPU Cache: L1, L2, and L3 Improvements

Cache memory sits between the CPU and RAM, storing frequently accessed data to minimize delays. The latest advancements focus on larger, faster, and more efficient cache structures.

L1 Cache (First-Level Cache) Enhancements

- The L1 cache remains small but extremely fast, with latencies of only a few clock cycles (around 1 ns).

- Recent CPUs have increased L1 associativity, which improves hit rates.

- Example: AMD Zen 4 and Intel 14th Gen CPUs utilize better branch prediction algorithms to maximize L1 cache efficiency.

L2 Cache: Striking a Balance between Speed & Size

- L2 cache has grown larger in modern architectures (1MB per core on AMD Zen 4 and 2MB per P-core on Intel Raptor Lake).

- Smarter cache prefetching techniques reduce memory stalls, improving performance in gaming and multi-threaded workloads.

L3 Cache: Boosting Performance for Complex Tasks

- L3 cache now serves as a shared high-speed buffer for all cores.

- AMD’s 3D V-Cache technology significantly increases L3 cache size (up to 128MB), which reduces trips to main memory in gaming and AI-driven tasks.

- Intel’s Adaptive LLC (Last-Level Cache) optimizes L3 cache for power efficiency and task-specific workloads.

Real-World Impact:

- More cache memory = fewer RAM accesses = faster computing performance.

- Gaming & AI workloads benefit immensely from larger L3 caches (AMD Ryzen 7 7800X3D).
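
The "more cache = fewer RAM accesses" relationship can be made concrete with the standard average memory access time (AMAT) formula: hit time plus miss rate times miss penalty, applied level by level. The latencies and miss rates below are hypothetical round numbers picked for illustration, not measurements of any Ryzen or Core processor.

```python
def amat(levels: list[tuple[float, float]], memory_latency_ns: float) -> float:
    """Average memory access time in nanoseconds.

    levels: (hit_latency_ns, miss_rate) for each cache level, L1 first.
    """
    penalty = memory_latency_ns
    # Work from the last-level cache back toward L1.
    for hit_latency, miss_rate in reversed(levels):
        penalty = hit_latency + miss_rate * penalty
    return penalty


DRAM_NS = 80.0  # hypothetical main-memory latency

# (hit latency in ns, local miss rate) for L1, L2, L3. All values are assumed.
baseline = [(1.0, 0.05), (4.0, 0.30), (12.0, 0.40)]
larger_l3 = [(1.0, 0.05), (4.0, 0.30), (12.0, 0.20)]  # bigger L3 halves its miss rate

print(f"baseline AMAT:  {amat(baseline, DRAM_NS):.2f} ns")
print(f"larger L3 AMAT: {amat(larger_l3, DRAM_NS):.2f} ns")
```

Halving the L3 miss rate in this sketch lowers the average access time even though no latency changed. That is the effect a larger last-level cache aims for in cache-sensitive workloads such as games.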

2️⃣ DDR5: The Next Generation of System Memory

DDR5 memory offers higher speeds, increased bandwidth, and better power efficiency compared to DDR4.

Key DDR5 Advancements:

Higher Speeds: Starts at 4800 MT/s (mega-transfers per second) and can reach over 8000 MT/s in high-end setups.

Better Power Efficiency: DDR5 operates at 1.1V (compared to DDR4’s 1.2V), reducing power consumption.

Doubled Bank Groups & Burst Length: DDR5 doubles the number of bank groups and the burst length (16 vs. 8 in DDR4), which allows more efficient data transfer.

Example: Intel’s 14th Gen and AMD’s Ryzen 7000 series fully support DDR5, unlocking faster memory-intensive computing.

Future Outlook: DDR5 is becoming mainstream, and DDR4 support is fading in newer chipsets.

3️⃣ High Bandwidth Memory (HBM): The Future of High-Performance Computing

HBM (High Bandwidth Memory) is an ultra-fast stacked DRAM designed for AI, GPUs, and high-performance computing (HPC).

HBM vs. Traditional DDR Memory:

| Feature | HBM (High Bandwidth Memory) | DDR5 |

| Bandwidth | 1TB/s+ (Extremely High) | 38-80 GB/s |

| Power Efficiency | Lower Power Draw Per GB | Higher power usage |

| Use Case | AI, HPC, GPUs | General-purpose computing |
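
The DDR5 bandwidth figures in the table follow from simple arithmetic: peak bandwidth is the transfer rate multiplied by the bus width in bytes. The sketch below assumes one 64-bit memory channel per module, which is the usual DIMM data width; the function name is made up for this example.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s for a given transfer rate in MT/s."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


for rate in (4800, 6400, 8000):
    single = peak_bandwidth_gbs(rate)
    print(f"DDR5-{rate}: {single:.1f} GB/s per channel, {2 * single:.1f} GB/s dual-channel")
```

These are theoretical peaks; sustained bandwidth in real workloads is lower. HBM reaches its much higher figures mainly by using a far wider interface per stack rather than a faster per-pin rate.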

Real-World Use Cases:

- AMD Instinct MI300X and NVIDIA H100 AI GPUs leverage HBM3 for AI workloads, deep learning, and data centers.

- Future desktop CPUs may integrate HBM as an L4 cache to enhance performance without increasing power draw.

Smarter, Faster, and More Efficient Memory Systems

Memory hierarchy advancements optimize CPU performance by reducing latency, increasing bandwidth, and enhancing power efficiency. With larger L3 caches, DDR5 adoption, and HBM integration, the future of high-speed computing looks brighter than ever.

DDR5 vs. LPDDR5X: Key Differences & Use Cases

With the rapid evolution of memory technologies, both DDR5 and LPDDR5X are leading innovations in different computing segments. DDR5 is designed for high-performance desktops, servers, and gaming PCs. LPDDR5X is optimized for mobile devices, ultrabooks, and energy-efficient computing.

1️⃣ What Is DDR5?

DDR5 (Double Data Rate 5) is the latest generation of desktop and server RAM. It offers higher speeds, greater bandwidth, and improved power efficiency compared to DDR4.

Key Features of DDR5:

Speeds: Starts at 4800 MT/s and can exceed 8000 MT/s with overclocking.

Bandwidth: Higher data transfer rates improve performance in gaming, AI, and data-intensive applications.

Power Efficiency: Runs at 1.1V (lower than DDR4’s 1.2V), reducing overall power consumption.

Capacity: Supports up to 256GB per DIMM, which is useful for servers and workstations.

Best For:

- High-performance desktops, gaming PCs, and workstations

- Servers handling AI, machine learning, and data processing

- Overclocking enthusiasts looking for maximum speed

2️⃣ What is LPDDR5X?

LPDDR5X (Low Power DDR5X) is an ultra-efficient memory designed for smartphones, tablets, ultrabooks, and AI-driven edge computing. It is the successor to LPDDR5 and offers even faster speeds and lower power consumption.

Key Features of LPDDR5X:

Speeds: Ranges from 6400 MT/s to 8533 MT/s, surpassing standard DDR5 speeds in some cases.

Power Efficiency: Operates at 0.5V – 0.9V, significantly reducing battery drain.

Bandwidth: Optimized for fast, real-time data processing, which improves performance in AI-driven mobile tasks.

Integration: Soldered directly onto the motherboard for compact, power-efficient designs.

Best For:

- Smartphones, tablets, and ultrabooks

- Energy-efficient laptops with long battery life

- AI-driven applications in mobile and IoT devices

3️⃣ DDR5 vs. LPDDR5X: Head-to-Head Comparison

| Feature | DDR5 (Desktop & Server) | LPDDR5X (Mobile & Ultrabooks) |

| Speed | 4800 – 8400 MT/s | 6400 – 8533 MT/s |

| Power Usage | 1.1V (lower than DDR4) | 0.5V – 0.9V (super-efficient) |

| Bandwidth | High for gaming, AI, and data processing | Optimized for mobile AI and real-time processing |

| Capacity | Up to 256GB per module | Integrated with SoC, max 64GB per system |

| Best For | Gaming PCs, workstations, servers | Smartphones, tablets, ultrabooks |

4️⃣ Choosing the Right Memory: DDR5 vs. LPDDR5X

➡️ Choose DDR5 If You Need:

Extreme performance for gaming, AI, or data-intensive workloads

Expandable RAM for future upgrades

Overclocking capabilities for maximum speed

➡️ Choose LPDDR5X If You Need:

Energy-efficient memory for battery-powered devices

Fast AI-driven real-time performance on mobile devices

Compact, soldered memory that enhances portability

5️⃣ The Future of DDR5 & LPDDR5X

DDR5 adoption is increasing, with major platforms like Intel 14th Gen and AMD Ryzen 7000 series fully embracing it.

LPDDR5X is becoming the standard for flagship smartphones, AI-powered ultrabooks, and IoT devices.

Newer mobile chips (such as Snapdragon X Elite and Intel Lunar Lake) leverage LPDDR5X for AI acceleration.

Final Verdict: While DDR5 dominates the PC and server space, LPDDR5X leads in mobile and low-power computing. Both will coexist as memory needs diversify across different computing platforms.

Security Enhancements: Protecting Against Modern Threats

With the increasing complexity of CPU microarchitectures, security threats like Spectre, Meltdown, and side-channel attacks have exposed vulnerabilities in speculative execution and caching mechanisms. Modern CPUs now integrate advanced security features to mitigate these risks without significantly compromising performance.

1️⃣ Understanding CPU Security Vulnerabilities

Spectre – Exploits speculative execution to leak sensitive data across process boundaries.

Meltdown – Allows unauthorized access to privileged kernel memory on affected processors that use out-of-order execution.

Side-Channel Attacks – Exploit timing differences, power consumption, and electromagnetic emissions to extract sensitive information.

These vulnerabilities exposed critical flaws in branch prediction, speculative execution, and cache timing, the fundamental mechanisms that improve CPU performance.
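
Timing side channels are easier to grasp through a software analogy. The sketch below is not a Spectre or Meltdown exploit; it only shows how an operation whose duration depends on secret data leaks information, using an early-exit comparison versus Python's constant-time `hmac.compare_digest`. The secret and guesses are made-up values, and the step counter stands in for elapsed time.

```python
import hmac


def steps_until_mismatch(secret: bytes, guess: bytes) -> int:
    """Count byte comparisons an early-exit check performs before stopping.

    More steps means more time, so the count reveals how many leading
    bytes of the guess are correct.
    """
    steps = 0
    for s, g in zip(secret, guess):
        steps += 1
        if s != g:
            break
    return steps


def constant_time_equals(secret: bytes, guess: bytes) -> bool:
    """Comparison designed so its timing does not reveal where inputs differ."""
    return hmac.compare_digest(secret, guess)


SECRET = b"hunter2"  # hypothetical secret value
for guess in (b"aaaaaaa", b"huaaaaa", b"huntaaa"):
    print(guess, steps_until_mismatch(SECRET, guess), constant_time_equals(SECRET, guess))
```

An attacker who can measure the early-exit version learns the secret one byte at a time. Cache-timing attacks rest on the same principle, with the measurable difference coming from whether data was already cached.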

2️⃣ Key Security Enhancements in Modern CPUs

Hardware-Based Mitigations

Microcode Updates & Patch-Level Fixes – CPU vendors (Intel, AMD, and ARM) rolled out microcode updates to mitigate Spectre and Meltdown via firmware patches.

Speculative Execution Guards – Techniques like Retpoline (Return Trampoline), a compiler-based mitigation, prevent branch target injection by rerouting indirect branches away from the indirect branch predictor.

Memory Tagging Extension (MTE) – Found in ARM architectures, MTE helps detect buffer overflows and memory corruption exploits.

Control Flow Integrity (CFI) – Enforces execution path verification, which reduces the risk of Return-Oriented Programming (ROP) attacks.

Secure Execution Environments

Intel SGX (Software Guard Extensions) – Creates isolated enclaves for secure processing of sensitive workloads (encryption keys, financial transactions).

AMD SEV (Secure Encrypted Virtualization) – Encrypts virtual machines (VMs) so that even cloud providers cannot access the data.

ARM TrustZone – Provides a separate secure execution environment for sensitive computations in mobile and IoT devices.

Cache & Memory Protection

L1 Terminal Fault (L1TF) Fixes – Prevent unauthorized access to L1 cache data, mitigating speculative execution attacks.

Branch Target Injection Mitigations – Reduce the impact of Spectre v2 by improving branch prediction security.

Encrypted Memory – CPUs like AMD Ryzen and Intel Xeon support memory encryption (AMD SME, Intel TME) to safeguard against cold boot attacks.

3️⃣ The Future of CPU Security

Post-Spectre CPUs are designed with security-first architectures that focus on better isolation, real-time anomaly detection, and AI-driven threat analysis.

Dedicated security processors (Google’s Titan M, Microsoft Pluton) and confidential computing will further enhance hardware security in cloud and enterprise environments.

Modern processors continue to balance performance and security. Next-generation microarchitectures will integrate zero-trust principles, AI-based threat detection, and hardware root-of-trust mechanisms.

AI-Driven Security in Modern CPUs: Enhancing Protection against Evolving Threats

As cyber threats grow more sophisticated, traditional security mechanisms in CPUs are struggling to keep up. To counter this, chip manufacturers are integrating Artificial Intelligence (AI) and Machine Learning (ML) into security frameworks, enabling real-time threat detection, anomaly prevention, and adaptive defenses.

1️⃣ How AI Enhances CPU Security

Real-Time Threat Detection & Prevention

AI can analyze millions of instructions per second and identify irregular execution patterns that indicate potential attacks.

AI-Based Behavioral Analysis: Monitors CPU instruction flow to detect malicious patterns (Spectre-like speculative execution anomalies).

Dynamic Attack Response: AI-driven firmware updates automatically patch zero-day vulnerabilities before widespread exploitation.

Hardware-Accelerated Malware Detection: Some CPUs now include dedicated AI cores to scan for known and emerging malware signatures at the silicon level.
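
As a rough picture of behavioral analysis, the sketch below flags samples of a hardware performance counter that stray far from a known-good baseline. It is a simple statistical check, far cruder than the ML models vendors describe. The counter (branch mispredictions per interval), the sample values, and the three-sigma threshold are all hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(samples: list[float], baseline: list[float],
                   threshold: float = 3.0) -> list[int]:
    """Return indexes of samples more than `threshold` standard deviations
    away from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    return [i for i, value in enumerate(samples)
            if abs(value - mu) > threshold * sigma]


# Hypothetical branch-misprediction counts per interval during normal operation.
baseline = [100, 102, 98, 101, 99, 100, 103, 97]

# New observations; the spike could indicate unusual speculative behavior.
observed = [101, 99, 140, 100]

print(find_anomalies(observed, baseline))
```

A production detector would combine many counters and learn what normal looks like for each workload, but the underlying pattern of comparing live telemetry against expected behavior is the same.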

2️⃣ AI-Integrated CPU Security Features

Intel Threat Detection Technology (TDT)

Uses ML algorithms to detect fileless malware and ransomware attacks by analyzing CPU behavior in real-time.

Works in tandem with hardware-based telemetry to flag suspicious processes before they execute.

AMD Shadow Stack & AI-Enhanced Exploit Prevention

Prevents Return-Oriented Programming (ROP) attacks by keeping a protected copy of return addresses in hardware and blocking any return that does not match it.

AI-assisted Control Flow Integrity (CFI) ensures safe application execution without performance degradation.
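
The shadow stack idea can be illustrated with a toy model. This is a conceptual sketch only, not AMD's hardware implementation: real shadow stacks live in protected memory managed by the CPU. The class names and addresses are invented for the example.

```python
class ShadowStackViolation(Exception):
    """Raised when a return address does not match the protected copy."""


class ToyCpu:
    def __init__(self) -> None:
        self.stack: list[int] = []         # normal stack, writable by the program
        self.shadow_stack: list[int] = []  # protected copy of return addresses

    def call(self, return_address: int) -> None:
        """Record the return address on both stacks."""
        self.stack.append(return_address)
        self.shadow_stack.append(return_address)

    def ret(self) -> int:
        """Pop the return address and verify it against the shadow copy."""
        address = self.stack.pop()
        expected = self.shadow_stack.pop()
        if address != expected:
            raise ShadowStackViolation(
                f"return to {address:#x} blocked, expected {expected:#x}")
        return address


cpu = ToyCpu()
cpu.call(0x401000)
cpu.stack[-1] = 0xDEADBEEF  # simulate a buffer overflow overwriting the return address
try:
    cpu.ret()
except ShadowStackViolation as error:
    print(error)
```

Because the attacker can overwrite only the normal stack, the mismatch is caught at return time, which is what defeats ROP chains built from corrupted return addresses.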

ARM AI Security Co-Processors

Dedicated AI-driven security modules in ARM TrustZone for edge AI, IoT, and mobile devices.

Uses on-chip ML models to detect power anomalies, memory injections, and side-channel attacks.

3️⃣ AI & Zero-Trust Security in Future CPU Designs

Autonomous Threat Defense

AI-powered security systems will proactively block threats before execution, reducing reliance on software-based antivirus solutions.

AI-Enhanced Microcode Updates

Instead of waiting for vendor patches, self-learning CPUs could autonomously reconfigure their microcode to defend against newly discovered vulnerabilities.

Secure Multi-Tenant Environments

AI-driven security will play a critical role in confidential computing, ensuring data protection in multi-cloud and hybrid environments.

AI as the Future of CPU Security

AI’s integration into CPU security is not just an upgrade—it is a necessity in the age of advanced cyber threats. With real-time monitoring, predictive threat intelligence, and automated security responses, AI is revolutionizing how modern processors defend against attacks.

Intel’s Latest Innovations in Microarchitecture: Alder Lake, Raptor Lake & Beyond

Intel continues to push the boundaries of CPU microarchitecture with hybrid core designs, AI-powered optimizations, and advanced power efficiency techniques. The Alder Lake (12th Gen) and Raptor Lake (13th Gen) processors, followed by the Meteor Lake and Arrow Lake architectures, showcase Intel’s commitment to innovation.

1️⃣ Alder Lake (12th Gen): The Birth of Hybrid Core Architecture

Hybrid Architecture (P-Cores & E-Cores)

Intel introduced Performance (P) cores for demanding tasks and Efficiency (E) cores for background workloads. This improves multitasking and power efficiency.

Intel Thread Director

AI-based scheduling dynamically assigns workloads to the appropriate core type, optimizing performance per watt.

DDR5 & PCIe 5.0 Support

Alder Lake CPUs were the first mainstream desktop processors to support DDR5 memory and PCIe 5.0, which enhances data bandwidth for gaming and AI applications.

2️⃣ Raptor Lake (13th Gen): Refinements & Higher Clock Speeds

Increased Core Counts – More E-cores were added for better parallel computing and multitasking.

Higher Boost Clocks – P-core frequencies reached 5.8 GHz and above, providing a performance edge in gaming and creative workloads.

Better Cache Optimization – Intel enlarged the L2 cache (from 1.25MB to 2MB per P-core and from 2MB to 4MB per E-core cluster), which benefits latency-sensitive applications.

3️⃣ Meteor Lake (Core Ultra Series 1, Late 2023): Intel’s Move to Chiplets

Tile-Based Chiplet Architecture – Instead of a monolithic die, Meteor Lake uses separate compute, graphics, and I/O tiles connected via Foveros 3D stacking.

Intel 4 Process Node – The compute tile uses EUV lithography, for which Intel cites up to 20% better performance per watt.

AI Acceleration – Introduces Intel AI Boost for AI-based performance tuning and machine learning workloads.

4️⃣ Arrow Lake (Core Ultra 200 Series, 2024): Next-Level Performance

Redesigned Compute Cores – Uses Lion Cove P-cores & Skymont E-cores for increased IPC (Instructions Per Cycle).

Enhanced Graphics Performance – Integrated Xe-LPG graphics for better gaming & GPU-accelerated tasks.

Lower Power Consumption – Originally planned for the Intel 20A (Ångström Era) process node with RibbonFET and PowerVia, Arrow Lake ultimately shipped with TSMC-built compute tiles, and those technologies moved to later Intel nodes.

5️⃣ How Intel’s Innovations Impact the Industry

Hybrid Architecture Leads the Future – It competes with AMD’s Zen 5 & ARM-based processors.

AI-Optimized Computing – Intel is embedding AI capabilities directly into CPUs for real-time performance tuning.

More Efficient Power Management – Ideal for laptops, data centers, and cloud computing applications.

Intel vs. AMD: A Comparison of Latest Microarchitectures (Alder Lake, Raptor Lake vs. Zen 4, Zen 5)

Intel and AMD continue to push CPU performance boundaries, but their architectural approaches differ significantly. Intel focuses on hybrid core designs and AI-driven optimizations, while AMD prioritizes high-efficiency chiplets and cache innovations. Let us compare their latest architectures.

1️⃣ Performance Core Design: Hybrid vs. Homogeneous

Intel: Performance (P) & Efficiency (E) Cores (Hybrid Architecture)

Introduced in Alder Lake (12th Gen) & Raptor Lake (13th Gen)

Uses a mix of high-power P-cores and low-power E-cores for improved efficiency

Intel Thread Director dynamically assigns tasks to the right cores

Great for gaming, productivity, and background workloads

AMD: Homogeneous Zen 4 & Zen 5 Cores

AMD does not use hybrid cores; instead, all Zen cores are high-performance

Better multi-threading due to symmetrical core architecture

Ideal for server workloads, content creation, and gaming

Winner? Intel leads in background-task handling & multitasking. AMD excels in raw multi-core performance.

2️⃣ Process Node & Power Efficiency

Intel (Alder Lake, Raptor Lake, Meteor Lake, Arrow Lake)

Intel 7 (10nm Enhanced SuperFin) for Alder & Raptor Lake

Intel 4 (EUV-based) for Meteor Lake (Core Ultra, late 2023)

Intel 20A (RibbonFET & PowerVia) originally planned for Arrow Lake (2024), which ultimately shipped with TSMC-built compute tiles

AMD (Zen 4 & Zen 5)

TSMC 5nm for Zen 4 CPUs (Ryzen 7000 series)

TSMC 4nm/3nm for Zen 5 (Ryzen 9000 series)

Chiplet-based design allows more cores per watt

Winner? AMD has better efficiency due to TSMC’s advanced nodes. However, Intel’s future RibbonFET and PowerVia may close the gap.

3️⃣ Cache & Memory Innovations

Intel’s Approach

Intel increased L2 cache size per core in Raptor Lake

Supports DDR5 & PCIe 5.0 while still maintaining DDR4 compatibility

Meteor Lake uses AI-enhanced cache prefetching

AMD’s Approach

3D V-Cache (L3 cache stacking) massively boosts gaming performance (Ryzen 7 7800X3D)

Zen 4 supports DDR5 & PCIe 5.0 but drops DDR4

Higher cache per core = better latency-sensitive performance

Winner? AMD dominates in cache-intensive workloads (gaming, AI, data processing), while Intel balances cache with hybrid efficiency.

4️⃣ AI & Machine Learning Performance

Intel’s AI Features

Intel AI Boost in Meteor Lake (Core Ultra) – hardware-level AI acceleration

Thread Director uses AI-based workload optimization

Built-in VNNI (Vector Neural Network Instructions) for AI inferencing

AMD’s AI Features

Ryzen AI (XDNA AI Engine) for Zen 4 & Zen 5

Better AI processing on APUs (Ryzen 7000 mobile chips)

AMD’s AVX-512 optimizations improve deep-learning tasks

Winner? Intel’s AI integration at the CPU level is ahead. However, AMD’s APUs are better for AI-based edge computing.

5️⃣ Gaming Performance & Multitasking

Intel (Best for High FPS Gaming & Single-Threaded Workloads)

Higher clock speeds (5.8 GHz+ on Raptor Lake)

Strong single-core IPC performance

Hybrid cores + Thread Director optimize gaming + background tasks

Meteor Lake may include AI-powered frame pacing enhancements

AMD (Best for Smooth Performance & Future-Proofing)

3D V-Cache CPUs (Ryzen 7 7800X3D) outperform Intel in gaming

Better multi-threading & power efficiency

Zen 5 expected to boost IPC significantly

Winner? Intel leads in raw FPS and hybrid optimization. However, AMD wins in gaming stability with 3D V-Cache.

6️⃣ Future Roadmap & Innovations

Intel’s Upcoming CPUs

Meteor Lake (Core Ultra, late 2023) – First tile-based (chiplet) Intel client CPU

Arrow Lake (Core Ultra 200 series, 2024) – New core architectures & AI optimizations

Lunar Lake (2024) – Extreme efficiency + AI-focused design

AMD’s Upcoming CPUs

Zen 5 (2024) – Higher IPC, AVX-512, AI-enhanced processing

Zen 6 (2025/26) – Further improvements in efficiency & chiplet interconnects

Winner? Both brands are innovating rapidly. However, Intel’s Meteor & Arrow Lake may redefine AI-integrated computing.

Final Verdict: Intel vs. AMD – Which is Better?

| Feature | Intel (Alder/Raptor/Meteor Lake) | AMD (Zen 4 / Zen 5) |

| Power Efficiency | Improving with Meteor Lake | More efficient with TSMC 5nm |

| Single-Core Speed | Best for gaming & tasks | High IPC but slightly lower GHz |

| Multi-Core Power | Efficient Hybrid approach | Superior in multi-threading |

| Cache & Memory | Balanced cache, AI prefetching | 3D V-Cache dominates gaming |

| AI Performance | AI Boost, VNNI, Thread Director | Strong AI in APUs, Ryzen AI |

| Future Roadmap | Meteor/Arrow Lake innovations | Zen 5/Zen 6 promising upgrades |

Which One Should You Choose?

For Gaming: AMD (3D V-Cache models excel in FPS stability)

For Productivity & Multitasking: Intel (Hybrid cores + AI scheduling help)

For AI & Future-Proofing: Intel (AI integration in Meteor/Arrow Lake)

For Workstations/Servers: AMD (Better multi-threading & chiplet scalability)

AMD’s Approach to High-Performance & Efficiency – Zen Microarchitectures and 3D V-Cache Technology

AMD has revolutionized CPU performance and efficiency with its Zen microarchitecture, which focuses on high core counts, efficient power usage, and innovative cache designs. The introduction of 3D V-Cache technology has further enhanced performance, particularly in gaming and latency-sensitive applications. Let us explore AMD’s strategy in depth.

1️⃣ Evolution of AMD’s Zen Microarchitectures

AMD’s Zen microarchitecture was first introduced in 2017. It marked a shift from its older Bulldozer-based CPUs. With each generation, AMD has refined IPC (Instructions Per Cycle), power efficiency, and core scalability.

Key Zen Generations & Improvements

| Zen Generation | Release Year | Process Node | Key Innovations |

| Zen (1st Gen) | 2017 | 14nm | SMT (Simultaneous Multithreading), Core Complex (CCX) design |

| Zen+ | 2018 | 12nm | Lower latency, better efficiency |

| Zen 2 | 2019 | 7nm | Chiplet design, double the floating-point performance, PCIe 4.0 |

| Zen 3 | 2020 | 7nm | Unified CCX (Core Complex), 19% IPC uplift |

| Zen 4 | 2022 | 5nm | DDR5, PCIe 5.0, AVX-512 support |

| Zen 5 | 2024 | 4nm/3nm | AI & ML optimizations, higher IPC |

Key Takeaway: Each Zen generation has improved IPC, cache structure, and energy efficiency, making AMD CPUs highly competitive against Intel.
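
IPC gains translate into performance through a simple first-order relationship: single-thread performance scales roughly with IPC multiplied by clock frequency. The sketch below applies it to the 19% IPC uplift listed for Zen 3 in the table. The clock ratios are hypothetical, and real results also depend on memory behavior and the workload.

```python
def relative_performance(ipc_ratio: float, clock_ratio: float) -> float:
    """First-order single-thread speedup: (new IPC / old IPC) * (new clock / old clock)."""
    return ipc_ratio * clock_ratio


ZEN3_IPC_RATIO = 1.19  # the 19% IPC uplift from the table above

same_clock = relative_performance(ZEN3_IPC_RATIO, clock_ratio=1.00)
faster_clock = relative_performance(ZEN3_IPC_RATIO, clock_ratio=1.05)  # assumed 5% clock gain

print(f"same clock:      {same_clock:.2f}x")
print(f"5% higher clock: {faster_clock:.2f}x")
```

This is why a generation with higher IPC can outperform an older chip even at the same or a lower clock speed.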

2️⃣ Chiplet-Based Architecture: Scalable & Efficient Design

AMD’s chiplet design allows higher core counts and better manufacturing efficiency compared to traditional monolithic CPU designs.

Separation of Compute and I/O Dies:

- CPU cores reside in separate Core Complex Dies (CCDs)

- I/O functions (PCIe, memory controller) are handled by a separate IOD

- Improves scalability & reduces manufacturing costs

Advantages Over Monolithic Designs:

- Better yield rates & cost-effectiveness (smaller chiplets = fewer defects)

- More cores per CPU (Ryzen 9 7950X has 16 cores)

- Scalability for desktop, server, and mobile platforms

Why It Matters: AMD can pack more cores and cache at lower cost than a comparable monolithic CPU design.
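
The "smaller chiplets = fewer defects" argument can be quantified with the simple Poisson yield model, in which the chance that a die has no defects is exp(-area × defect density). The die areas and defect density below are hypothetical round numbers, not figures for any AMD or TSMC product, and real yield models are more elaborate.

```python
import math


def die_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Poisson yield model: probability that a die has zero defects."""
    return math.exp(-area_cm2 * defects_per_cm2)


D0 = 0.1  # hypothetical defect density, in defects per square centimeter

monolithic = die_yield(area_cm2=6.0, defects_per_cm2=D0)  # one large die
chiplet = die_yield(area_cm2=0.75, defects_per_cm2=D0)    # one of eight small dies

print(f"monolithic die yield: {monolithic:.1%}")
print(f"single chiplet yield: {chiplet:.1%}")
```

Both options use the same total silicon area in this sketch, but a defect ruins only one small chiplet instead of the whole large die, so far less wafer area is thrown away. The trade-off is the extra cost and latency of the interconnect between chiplets.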

3️⃣ 3D V-Cache: Game-Changing Cache Technology

AMD introduced 3D V-Cache to enhance gaming performance and latency-sensitive applications.

What Is 3D V-Cache?

- A stacked L3 cache technology that increases total cache size without increasing core count

- Reduces how often data must be fetched from RAM, which improves performance

Impact on Gaming & Workloads:

| CPU | L3 Cache | Performance Gain |

| Ryzen 7 7800X3D | 96MB (vs. 32MB) | 25–30% higher gaming FPS |

| Ryzen 9 7950X3D | 128MB | Improved content creation & AI workloads |

Why It Matters: 3D V-Cache significantly boosts gaming FPS and real-time processing workloads, making it a game-changer for performance enthusiasts.

4️⃣ AMD’s Power Efficiency: Beating Intel with TSMC’s Process Nodes

AMD’s power efficiency advantage comes from its use of TSMC’s cutting-edge fabrication nodes and smart power management techniques.

Zen 4 (Ryzen 7000 Series) Power Efficiency:

- Built on a 5nm TSMC node (vs. Intel 7, a 10nm-class process)

- Up to 35% more performance per watt than Intel Alder Lake/Raptor Lake

- Lower power draw in idle & light workloads

Zen 5 & Future Power Efficiency Improvements:

- Expected to use 4nm/3nm process

- Dynamic frequency scaling for AI-driven workloads

- Even better power-to-performance ratio

Why It Matters: AMD’s smaller nodes and efficient chiplet design make Ryzen CPUs run cooler and use less power than Intel’s hybrid-core architecture.

Why AMD’s Zen Strategy Works

AMD’s Zen microarchitectures and 3D V-Cache technology have redefined CPU performance, particularly for gaming, AI, and energy-efficient computing.

Zen’s Strengths Over Intel:

- More cores & cache per watt

- Better scalability with chiplet design

- Superior gaming performance with 3D V-Cache

- Lower power consumption due to advanced TSMC nodes

Who Should Choose AMD?

- Gamers & Creators: Ryzen 7800X3D & 7950X3D dominate gaming & content workloads

- Power-Efficient Users: Lower-wattage CPUs run cooler with great efficiency

- AI & ML Enthusiasts: Zen 5 will further optimize AI-driven tasks

ARM’s Role in Mobile & Low-Power Computing – How ARM’s Efficiency is Shaping Mobile and Server Processors

The ARM architecture has transformed computing by prioritizing power efficiency, scalability, and performance. Long established in mobile devices, ARM-based processors are now reshaping laptops, servers, and AI workloads, challenging traditional x86 architectures from Intel and AMD.

Let us explore how ARM is revolutionizing low-power computing, its impact on mobile and server markets, and why tech giants like Apple, Qualcomm, and NVIDIA are embracing ARM.

1️⃣ What Makes ARM Different? The Power-Efficient RISC Architecture

Unlike x86 CPUs, ARM processors use a RISC (Reduced Instruction Set Computing) architecture.

RISC allows:

- Simpler, more efficient instructions → Faster execution & lower power consumption

- Lower transistor count → Less heat generation & better battery life

- Customizability → Companies can design tailored ARM cores for specific needs

Why It Matters: ARM’s efficiency-first approach makes it ideal for mobile devices, IoT, and power-sensitive computing.

2️⃣ ARM’s Dominance in Mobile Computing

ARM dominates smartphones, tablets, and embedded devices due to its energy-efficient cores.

Key advantages of ARM:

- Optimized Power Efficiency → Extends battery life in mobile devices

- Custom CPU Designs → Apple (M-series), Qualcomm (Snapdragon), and Google (Tensor) create custom ARM-based SoCs

- High Performance with Low Heat → Ideal for thin and fanless devices

Apple’s ARM-Based M-Series Chips: A Game Changer

Apple’s shift from Intel to ARM-based M-series chips (M1, M2, and M3) highlights ARM’s potential in laptop and desktop computing:

| Chip | Performance | Power Efficiency |
| --- | --- | --- |
| M1 | Outperformed Intel Core i7 | 2x battery life |
| M2 | 18% faster CPU, 35% faster GPU | More efficient memory bandwidth |
| M3 | AI & ML optimizations | 3nm node for better efficiency |

Why It Matters: ARM is no longer limited to mobile. Apple’s success proves it can rival x86 in mainstream computing.

3️⃣ ARM’s Growing Presence in Servers & Cloud Computing

ARM’s low-power, high-efficiency architecture is gaining traction in data centers, where it competes with Intel Xeon and AMD EPYC.

Cloud Providers Adopting ARM:

- Amazon AWS Graviton CPUs – Up to 40% better price-performance than x86

- Google’s ARM-based Tau T2A instances – Designed for cloud workloads

- Microsoft’s Azure ARM servers – Optimized for AI and big data

Why ARM is Ideal for Data Centers:

- Lower power consumption → Reduces cooling costs

- Scalable & multi-core performance → Great for distributed workloads

- Flexible licensing model → Companies can license ARM designs and customize CPU cores

Key Takeaway: ARM now powers the major cloud platforms, which shows it is more than just a mobile architecture.

4️⃣ The Future of ARM: AI, IoT & Automotive Computing

Beyond mobile and servers, ARM is expanding into AI, IoT, and automotive industries.

AI & ML Acceleration:

- ARM’s Ethos NPUs (Neural Processing Units) optimize machine learning workloads

- Apple’s M-series Neural Engine enhances AI processing

IoT & Edge Computing:

- Low-power ARM chips are ideal for smart devices, sensors, and robotics

Automotive Industry:

- Tesla, NVIDIA, and Qualcomm use ARM-based self-driving processors

Why It Matters: ARM’s energy-efficient AI acceleration will drive the future of smart computing.

Will ARM Overtake x86?

ARM’s dominance in mobile and its growing presence in laptops and cloud computing are undeniable. But can it replace x86 in all markets?

ARM’s Strengths:

- Power-efficient & scalable

- Customizable CPU designs

- Leading in mobile, AI, and cloud

Challenges:

- Software compatibility issues for x86 apps

- High-performance desktops & gaming still favor x86

The Bottom Line: ARM is reshaping modern computing. With its growing adoption in laptops, cloud, and AI, it could eventually challenge x86 across all sectors.

The Future of CPU Microarchitecture: Predictions

What is Next for Processors?

Speculating on Upcoming Technologies Like 3nm and 2nm Fabrication

CPU microarchitecture is evolving rapidly, driven by advancements in transistor scaling, power efficiency, AI integration, and chip design innovations. As the industry approaches the physical limits of Moore’s Law, manufacturers are finding new ways to boost performance while reducing power consumption.

Let us explore the key trends shaping the future of processors: 3nm and 2nm fabrication, advanced packaging, AI-driven architectures, and new computing paradigms.

1️⃣ The Shift to 3nm and 2nm Process Nodes

What Does a Smaller Node Mean?

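A process node names a generation of chip manufacturing technology. The nanometer figure once tracked a physical transistor dimension; today it is largely a marketing label, but a smaller node still generally means smaller, more densely packed transistors. A smaller node allows:

- More transistors per chip → Higher performance & efficiency

- Lower power consumption → Longer battery life & reduced heat

- Better density → Enables AI, 5G, and HPC (High-Performance Computing)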

3nm Process: Already Here

TSMC and Samsung are already mass-producing 3nm chips, and Intel is preparing a comparable node:

- Apple M3 & A17 Pro (TSMC 3nm) – Improved power efficiency in MacBooks & iPhones

- Intel’s 20A (equivalent to 3nm) – Expected in 2025, introducing RibbonFET & PowerVia technology

- Samsung 3nm GAA (Gate-All-Around) – Enhances transistor control for higher efficiency

2nm & Beyond: The Next Big Leap

- TSMC plans 2nm mass production by 2025

- IBM has already demonstrated a 2nm chip, projecting 45% better performance or 75% lower power consumption than 7nm chips

- Intel’s 18A process (~1.8nm) is expected in 2026

Why It Matters: Shrinking transistors will push CPUs to new performance levels. However, challenges like quantum effects and power leakage require new materials and designs.

2️⃣ Beyond Traditional Scaling: 3D Stacking & Chiplet Designs

With transistor miniaturization slowing, CPU manufacturers are turning to advanced packaging techniques:

- 3D Stacking (Foveros, Hybrid Bonding) → Improves chip density & interconnect speed

- Chiplet-Based Designs → Boost scalability while reducing manufacturing costs

- Monolithic vs. Modular Approach → AMD’s chiplet-based Zen CPUs have already proven superior in performance-per-watt
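One reason chiplets can reduce manufacturing cost is yield: a small die is less likely to contain a fatal defect than a large one. The sketch below uses the simple Poisson yield model, Y = exp(-D × A), as an assumption; real foundries use more detailed models. The defect density and die sizes are hypothetical, and packaging and interconnect costs are ignored.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to be defect-free under a Poisson model."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm^2 = 1 cm^2
    return math.exp(-defects_per_cm2 * area_cm2)


def silicon_per_good_product(die_area_mm2: float,
                             dies_per_product: int,
                             defects_per_cm2: float) -> float:
    """Average wafer area (mm^2) consumed to obtain one working product."""
    good_fraction = poisson_yield(die_area_mm2, defects_per_cm2)
    return dies_per_product * die_area_mm2 / good_fraction


DEFECT_DENSITY = 0.1  # hypothetical defects per cm^2

# One 600 mm^2 monolithic die versus four 150 mm^2 chiplets.
monolithic = silicon_per_good_product(600, 1, DEFECT_DENSITY)
chiplets = silicon_per_good_product(150, 4, DEFECT_DENSITY)

print(f"Monolithic: {monolithic:.0f} mm^2 of silicon per good CPU")
print(f"Chiplets:   {chiplets:.0f} mm^2 of silicon per good CPU")
```

Under these assumptions the chiplet version wastes noticeably less silicon, because a defect ruins only one small die rather than the whole processor.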

What’s Next?

Intel’s Foveros Direct and TSMC’s SoIC technology will drive 3D-stacked chips. Meanwhile, AMD and NVIDIA continue refining chiplet-based architectures.

3️⃣ AI-Driven CPU Optimization: A Smarter Future

Artificial Intelligence (AI) is playing a bigger role in CPU microarchitecture optimization:

- Dynamic Power Management – AI algorithms adjust clock speeds & voltages in real-time

- Predictive Workload Scheduling – Improves CPU efficiency based on machine learning models

- Neural Processing Units (NPUs) – Integrated AI accelerators in Intel Meteor Lake & AMD Ryzen AI

Future Trend: AI will enable self-optimizing processors, reducing power wastage and enhancing adaptive performance.
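Adjusting clock speed and voltage saves so much power because of the standard approximation for CMOS dynamic power, P ≈ α × C × V² × f, where voltage enters squared. Here is a minimal sketch of that formula in Python. The capacitance, voltages, and frequencies are illustrative assumptions, not figures for any real processor, and static (leakage) power is ignored.

```python
def dynamic_power(capacitance_f: float,
                  voltage_v: float,
                  frequency_hz: float,
                  activity: float = 1.0) -> float:
    """Approximate dynamic (switching) power in watts: a * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical operating points for the same chip.
boost = dynamic_power(capacitance_f=1e-9, voltage_v=1.2, frequency_hz=4.0e9)
eco = dynamic_power(capacitance_f=1e-9, voltage_v=0.9, frequency_hz=2.0e9)

# Fraction of dynamic power saved by dropping to the lower operating point.
saving = 1.0 - eco / boost

print(f"Boost: {boost:.2f} W, eco: {eco:.2f} W, saving: {saving:.0%}")
```

In this example, halving the clock alone would halve the power, but lowering the voltage at the same time cuts it by roughly 72%. That squared term is what any power management policy, AI-driven or not, is trying to exploit.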

4️⃣ Quantum & Photonic Computing: A Long-Term Vision

Traditional transistor-based CPUs still dominate, but research in quantum and photonic computing is accelerating:

- Quantum Processors – Google, IBM, and Intel are exploring qubit-based computing

- Photonic CPUs – Use light instead of electricity, which could enable ultra-fast, low-power computing

Reality Check: These technologies are still in the early research stages, but they could redefine computing in 10 to 20 years.

What to Expect in the Next Decade?

Between 2025 and 2030, we will likely see:

- Mainstream 2nm CPUs with extreme power efficiency

- More chiplet-based & 3D-stacked processors

- AI-driven optimization making CPUs smarter

- Potential breakthroughs in quantum & optical computing

The future of CPU microarchitecture is exciting, with new materials, AI integration, and radical designs set to redefine performance, efficiency, and computing capabilities.

The Role of Quantum & Neuromorphic Computing

Exploring Potential Post-Silicon Computing Paradigms

As traditional silicon-based CPU microarchitecture approaches its physical limits, researchers and tech giants are actively exploring post-silicon computing paradigms. Two of the most promising technologies are Quantum Computing and Neuromorphic Computing.

These emerging technologies could revolutionize computing power, efficiency, and problem-solving capabilities beyond what current architectures allow. Let us dive into their significance and future potential.

1️⃣ Quantum Computing: A Leap Beyond Classical CPUs

Quantum computing is not an evolution of traditional CPUs but a completely new paradigm. Unlike classical processors that use binary bits (0s and 1s), quantum computers use qubits that can exist in multiple states simultaneously (thanks to superposition).

How Quantum Computing Works

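- Superposition → A qubit can be 0, 1, or a weighted combination of both until it is measured

- Entanglement → Entangled qubits share a correlated state, so measuring one tells you about the other

- Quantum Parallelism → Lets algorithms act on many states at once, solving certain problems (such as factoring large numbers) far faster than classical machines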
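Superposition is easier to grasp with numbers. The sketch below simulates a single qubit on an ordinary computer as a pair of complex amplitudes. It is a toy classical simulation, not code for real quantum hardware, and the function names are illustrative. Applying a Hadamard gate to the 0 state produces an equal superposition, and the measurement probabilities are the squared magnitudes of the amplitudes.

```python
import math
import random


def hadamard(state):
    """Apply the Hadamard gate to a qubit given as (amp_of_0, amp_of_1)."""
    a, b = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Chance of measuring 0 and 1: the squared amplitude magnitudes."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


def measure(state, rng):
    """Collapse the qubit to a classical bit according to its probabilities."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


zero = (1 + 0j, 0j)           # a qubit that is definitely 0
plus = hadamard(zero)         # equal superposition of 0 and 1
p0, p1 = probabilities(plus)  # each close to 0.5

rng = random.Random(42)       # seeded only to make runs repeatable
samples = [measure(plus, rng) for _ in range(1000)]
print(f"P(0)={p0:.2f}, P(1)={p1:.2f}, ones measured: {sum(samples)} of 1000")

# Applying Hadamard a second time undoes the superposition.
back = hadamard(plus)
```

Each measurement still yields a plain 0 or 1. The quantum advantage comes from manipulating the amplitudes before measuring, not from reading out "both values at once".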

Potential Applications

- Cryptography & Cybersecurity – Quantum computers could break traditional encryption, which is driving the development of new quantum-safe security methods

- Drug Discovery & Material Science – Simulating molecular interactions at an atomic level

- Financial Modeling – Optimizing complex calculations for banking & stock markets

- Artificial Intelligence – Speeding up AI model training & optimization

Challenges of Quantum CPUs

- Error Rates & Stability – Qubits are highly sensitive to environmental disturbances

- Cooling Requirements – Many qubit designs must be cooled to near absolute zero (about -273°C)

- Limited Scalability – Today’s largest quantum processors have around a thousand noisy physical qubits, far short of what fault-tolerant computing needs

What’s Next?

Major players like Google, IBM, Intel, and D-Wave are working on fault-tolerant, scalable quantum processors. IBM has already unveiled a 1,121-qubit processor (Condor) and plans to reach 10,000+ qubits in the 2030s.

2️⃣ Neuromorphic Computing: Brain-Inspired Processors

Traditional CPUs process instructions largely in sequence. Neuromorphic computing, by contrast, mimics the human brain’s neural networks to achieve massive parallelism and energy efficiency.

How Neuromorphic Processors Work

- Uses artificial neurons & synapses (spiking neural networks – SNNs)

- Processes data in parallel, like biological brains

- Requires minimal power compared to classical CPUs
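To make the idea of a spiking neuron concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common textbook model for spiking neural networks. It is an illustrative software model with made-up parameter values, not a description of how Loihi, TrueNorth, or any specific chip implements its neurons.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    At each step the membrane potential decays by `leak`, the input is
    added, and the neuron emits a spike (1) and resets once the potential
    reaches `threshold`. Otherwise it stays silent (0).
    """
    potential = reset
    spikes = []
    for current in input_current:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes


# Three small inputs accumulate until the neuron fires on the third step.
spikes = simulate_lif([0.5, 0.5, 0.5, 0.0, 0.0, 0.5])
print(spikes)  # [0, 0, 1, 0, 0, 0]
```

The neuron only does work when inputs arrive and only signals when it fires. This event-driven behavior is the main reason neuromorphic hardware can run at very low power.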

Benefits of Neuromorphic Computing

- Extreme Energy Efficiency – Operates at milliwatt power levels (ideal for IoT & edge AI)

- Real-Time Learning – Can adapt to new information without retraining

- Faster AI Inference – Ideal for autonomous systems, robotics, and smart sensors

Real-World Applications

- Edge AI & IoT Devices – Ultra-low power AI processing in smartphones, wearables, and cameras

- Autonomous Vehicles & Robotics – Real-time decision-making with minimal power consumption

- Healthcare & Brain-Machine Interfaces – Advanced diagnostics & neuroprosthetics

Leading Neuromorphic Chips

- Intel Loihi – A neuromorphic chip with roughly 130,000 neurons, built for ultra-efficient AI tasks

- IBM TrueNorth – Brain-inspired processor with 1 million neurons

- SpiNNaker (University of Manchester) – Designed for real-time brain simulation

What’s Next?

Neuromorphic chips will likely complement classical CPUs & GPUs rather than replace them. With AI-driven applications growing, these chips could transform edge computing & real-time AI systems.

Future Outlook: Quantum vs. Neuromorphic Computing

| Feature | Quantum Computing | Neuromorphic Computing |
| --- | --- | --- |
| Inspired By | Quantum mechanics | Human brain |
| Processing Type | Probabilistic & parallel | Event-driven & parallel |
| Best For | Cryptography, AI, scientific simulations | Low-power AI, real-time learning, robotics |
| Maturity Level | Early-stage (still in research) | Closer to commercialization |
| Key Players | IBM, Google, Intel, D-Wave | Intel, IBM, Qualcomm, BrainChip |

Will These Replace Traditional CPUs?

Quantum Computing will revolutionize specific industries like cryptography, AI, and physics simulations. However, it will not replace traditional processors for everyday tasks.

Neuromorphic Computing will likely become an essential component in AI, robotics, and edge computing, co-existing with conventional CPUs.